Writing the vernacular: Transcribing and tagging the Newcastle Electronic Corpus of Tyneside English

Joan Beal,

School of English Literature, Language and Linguistics,

University of Sheffield

Karen Corrigan,

School of English Literature, Language & Linguistics,

Newcastle University

Nicholas Smith,

School of English, Sociology, Politics & Contemporary History,

University of Salford

Paul Rayson,

Computing Department,

Lancaster University

Abstract

The Newcastle Electronic Corpus of Tyneside English (NECTE) presented a number of problems not encountered by those producing corpora of standard varieties. The primary material consisted of audio recordings which needed to be orthographically transcribed and grammatically tagged. Preston (1985, 2000), Macaulay (1991), Kirk (1997), Cameron (2001) and Beal (2005) all note that representing vernacular Englishes orthographically, e.g. by using "eye dialect", can be problematic on various levels. Apart from unwelcome associations with negative political, racial or social connotations, there are theoretical objections to devising non-standard spellings which represent certain groups of vernacular speakers, thus making their speech appear more differentiated from mainstream colloquial varieties than is warranted. In the first half of this paper, we outline the principles and methods adopted in devising an Orthographic Transcription Protocol (OTP) for such a vernacular corpus, and the challenges faced by the NECTE team in practice. Protocols for grammatical tagging have likewise been devised with standard varieties in mind. In the second half, we relate how existing part-of-speech (POS)-tagging software (CLAWS4, cf. Garside & Smith 1997; and Template Tagger, cf. Fligelstone et al. 1997) had to be adapted to take account of the non-standard grammar of Tyneside English.

1. Introduction: The NECTE project

NECTE is the result of a pioneering project which brings together, and makes publicly available, recordings of Tyneside speakers made at two different periods in the 20th century. Crucially, it also "future-proofs" the data, ensuring that access to this resource remains possible despite future changes in computing technology. As such, it will have the longevity that such a remarkable record of the social and linguistic history of the North East deserves. In this paper, we hope to give a flavour of the process itself and to demonstrate why the resurrection of this neglected material is so important.

The aim of the NECTE project was to enhance, improve access to and promote the re-use of two pre-existing corpora by amalgamating them into a single, Text Encoding Initiative (TEI)-conformant electronic corpus. Part of the data is a legacy from the Tyneside Linguistic Survey (TLS), which, in the decade 1965-1975, attempted to construct an electronic corpus of speakers from Tyneside in North-East England, and to analyse it computationally. We have digitized and restored the viable recordings which survive from the TLS and combined them with those from a more recent dialect survey, the ESRC-funded Phonological Variation and Change in Contemporary Spoken British English (PVC) project, conducted in 1994.

1.1 The Tyneside Linguistic Survey Sub-Corpus

The TLS project was funded by what was then the Social Science Research Council (SSRC), and it originally set out to conduct 150 loosely-structured interviews with people from Gateshead on the south bank of the Tyne. A stratified random sample was selected, and data was collected by means of informal interviews conducted in the participants' homes by a single interviewer, Vincent McNeaney, who was a member of the same community. Speakers were encouraged to talk about their life histories and their attitudes to the local dialect. In addition, at the end of each interview, informants were asked for acceptability judgements on constructions containing vernacular morpho-syntax, and whether they knew or used a range of traditional dialect words. Interviews varied somewhat in length but lasted 30 minutes on average, and were recorded onto reel-to-reel analogue tapes. The TLS project also intended to interview people from the city of Newcastle on the north bank of the Tyne. As far as we know, none of these tapes survive, though phonetic and social data from seven interviews is extant (see Moisl & Maguire 2008).

The TLS project team transcribed the first ten minutes of each interview onto index cards, one of which can be seen in image 1. Index card transcriptions conveyed interviewee turns only and each brief section of audio was annotated for the following types of information:

- Standard English orthography

- a corresponding phonetic transcription of the audio segment

- some associated grammatical, phonological and prosodic details

They also created digital electronic text files containing encoded versions of the phonetic transcriptions as well as separate ciphers conveying grammatical, phonological and prosodic information.

A further set of digital electronic text files was created, containing different kinds of encoded social data for each speaker. Not all of this material is extant: the NECTE team has been able to identify 114 interviews, but not all corpus components survive for each. Specifically, there remain:

- 103 audio recordings, of which three are badly damaged (see documentation for the TLS and PVC base corpora). For the remaining 29 interviews, the corresponding analogue tape is either blank or simply missing

- 57 index card sets, all of which are complete

- 61 digital phonetic transcription files

- 64 digital social data files

For further details of the NECTE project, see Allen et al. (2007) or the NECTE website.

1.2 The Phonological Variation and Change Sub-Corpus

The Phonological Variation and Change in Contemporary Spoken English (PVC) project was funded by the Economic and Social Research Council. The award holders were Lesley Milroy, Jim Milroy and Gerry Docherty, and contributors to the project were Paul Foulkes, Sue Hartley, David Walshaw, Dom Watt and Penny Oxley. They selected a judgement sample, balanced according to age, gender and social class, and the fieldworker stayed in the background whilst pairs of informants who were well known to each other (friends, siblings or partners) conversed freely. Eighteen dyadic conversations, each of around 60 minutes' duration, were recorded onto digital audio tape (DAT), so no digitisation or enhancement was required in this case.

1.3 What is NECTE?

With a gap of almost 30 years between the collection of the TLS and the PVC data, combining these two resources into the NECTE corpus makes it possible to observe linguistic and social change on Tyneside in 'real time'. Our overarching aim was to produce a web-based electronic resource of Tyneside English containing aligned speech and text samples from individuals born between 1890 and 1970.

NECTE amalgamates the TLS and PVC materials into a single Text Encoding Initiative-conformant XML-encoded corpus and makes them available in a variety of aligned formats:

- digitized audio

- standard orthographic transcription

- phonetic transcription

- POS-tagged

2. Data representation: Orthographic transcription

The audio content of the TLS and PVC corpora has been transcribed in British English orthography, and this transcription is included in its entirety in the NECTE corpus. Two problems had to be resolved in creating and annotating this representation:

- application of English spelling to non-standard spoken English

- adaptation of existing POS-tagging software from UCREL so as to accommodate non-standard English

Tagliamonte (2007) summarizes the issues to be borne in mind when tackling these problems and thus provides a model for transcribing vernacular corpora effectively. We adapted this model in the initial stages of the NECTE project, but it proved in the end not to be entirely suitable, given our rather different goals. Certain aspects of the Tagliamonte Protocol were nonetheless retained, particularly its major principles, summarized below:

- phonological processes are typically not represented

- morphological alternations that are phonologically marked are orthographically represented

- local dialect lexis and slang that does not appear in dictionaries is recorded consistently

2.1 NECTE OTP

Tyneside spoken English differs significantly from RP and other varieties of spoken English. For example, know is typically pronounced /na:/, especially in the tag you know. In popular dialect literature, this would be spelled <knaa>, as can be seen in the extract from the Viz comic in image 2. Some lexical items in Tyneside English have no agreed 'standard' spelling, since they do not exist in Standard English: examples found in the NECTE corpus include boody 'broken china', knooled 'kept down / hen-pecked' and palatic 'drunk'. The following principles were adopted in constructing the NECTE OTP:

- Where the segments are phonetic variants of ones for which a Standard English spelling exists, that spelling is used; thus /na:/ is spelt <know>. This policy was adopted because NECTE provides sound files, so indications of accent or informality are not needed in the orthographic transcription. [1] It was also a principled decision, given the unreliability of semi-phonetic spelling and the views expressed in, e.g., Preston (1985, 2000), Macaulay (1991), Kirk (1997) and Cameron (2001), and summarised in Beal (2005).

- Where the variant is morphologically or morpho-phonemically determined, a variant spelling was used. Thus /mI/ for Standard English my was spelt <me>, and the Tyneside negative of do, /dIvnt/, was spelt <divn't>.

- Where the segments are genuinely dialectal and occur in the dialect dictionaries of Heslop (1892) and/or Wright (1896-1905), the dictionary spelling was used. In some cases the dictionaries differed: the word /nu:ld/ 'henpecked' was spelt both <nooled> and <knooled>, as can be seen in the extract from Wright reproduced as image 3. We eventually chose the latter because Wright gives it primacy.

- If a lexical item was not found in the dialect dictionaries, a spelling was agreed on and recorded in a lexical database, which is available as Appendix 2 on the NECTE website.
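The order in which these principles apply can be pictured as a lookup cascade. The sketch below is purely illustrative: the table names and contents (taken from the examples in this section, with simplified ASCII phonetic keys) are hypothetical stand-ins, not the NECTE lexical database.

```python
# Hypothetical sketch of the OTP decision order. The tables hold only the
# examples cited in this section; the keys are simplified ASCII renderings.

# Morphologically determined variants keep a variant spelling.
MORPHOLOGICAL_VARIANTS = {"mI": "me", "dIvnt": "divn't"}

# Dialect words take the spelling given primacy in Wright (1896-1905).
DIALECT_DICTIONARY = {"nu:ld": "knooled"}

# Spellings agreed by the team for words found in neither dictionary.
LEXICAL_DATABASE: dict[str, str] = {}

# Phonetic variants of words that have a Standard English spelling.
# /mI/ could also be read as a variant of "my", hence the ordering below.
STANDARD_SPELLING = {"na:": "know", "mI": "my"}


def otp_spelling(form: str) -> str:
    """Return the orthographic spelling the OTP would assign to a phonetic form."""
    # Morphological variants are checked first, so /mI/ becomes <me>, not <my>.
    for table in (MORPHOLOGICAL_VARIANTS, DIALECT_DICTIONARY,
                  LEXICAL_DATABASE, STANDARD_SPELLING):
        if form in table:
            return table[form]
    # An unseen item needs a spelling to be agreed and recorded first.
    raise KeyError(f"no agreed spelling for {form!r}")
```

For example, `otp_spelling("na:")` returns `"know"`, while `otp_spelling("mI")` returns `"me"` because the morphological table is consulted before the standard one.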

2.2 'Cockroaches' and other 'pesky critters'

Because the NECTE corpus had begun to evolve before the welcome AHRC funding that made the enhancement programme described earlier possible, our transcription goals up to that point had been very close to those of Tagliamonte; in fact, we had collaborated very closely with her. Accordingly, we used standard orthography and punctuation to enhance readability and ease of transcription. We also created special symbols for various morpho-syntactic features that Beal and Corrigan were planning to analyse in subsequent research (see Beal & Corrigan 2002, 2005a, 2005b). Thus, relative clause markers (including zero relatives) were marked up in the text, both to distinguish, e.g., relative that from complementizer that and to record unmarked relatives; doing so during transcription meant that the context was most easily accessible. Our OTP also included abbreviations for speaker turns, hesitations and false starts, and special symbols for laughter and other vocal noises. Special codes were even created for silence and for any utterance which, for various reasons, was deemed uninterpretable. Some samples of the conventions we used are as follows:

- Interruptions and overlap:

- False starts

- whole word= -- e.g. if--if you want to have fun--fun

- partial word= - e.g. i-if you want to have f-fun

- Pauses

- Long= ...(N (approx. length in seconds))

- Medium= ...

- Short= ..

- Punctuation:

- Beginnings and ends of sentences, interrogatives, first person pronouns etc. are marked by standard English orthography.

- Vocal noises:

- Laughter= @

- Cough= †

- Sigh= §

- Tut= ™

- Creaky voice= hh

- Transcriber's perspective:

- Researcher's comment= (())

- Uncertain hearing= <XX> (transcriber may wish to indicate possible representations)

- Indecipherable syllable= <X>

- Zero Relative Pronoun:

We use the convention RPØ at the head of a relative clause to indicate that a relative pronoun is absent, and brackets to separate the relative clause from the clause it relates to: she knew people [RPØ was with them]

Given that automatically tagging NECTE, a vernacular corpus, was already extremely complex (as we shall see in the next section), almost all of these special transcription conventions (or 'cockroaches and pesky critters', as Hermann Moisl, the computational linguist on our project, dubbed them) had to be removed manually before the corpus was released to UCREL for tagging. Converting them to TEI-conformant XML would have taken too long, and they would also have complicated the CLAWS process unnecessarily. We hope, at a future date, to reinstate these valuable linguistic and paralinguistic features using TEI-conformant XML.
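The conventions were removed by hand. Purely to illustrate what an automated pre-pass might look like, the sketch below strips a subset of the symbols listed above with regular expressions. The pattern list is our own assumption: it ignores speaker turns and the possible readings a transcriber may add for <XX>, and it deliberately leaves single hyphens alone, since partial-word false starts (i-if) were later tagged FU and hyphenated compounds (night-schools) must survive.

```python
import re

# Hypothetical clean-up patterns for a subset of the OTP conventions,
# applied in order (long pauses must be handled before medium/short ones).
OTP_PATTERNS = [
    (re.compile(r"\(\(.*?\)\)"), " "),              # researcher's comment ((...))
    (re.compile(r"\.\.\.\(\d+(?:\.\d+)?\)"), " "),  # long pause ...(N)
    (re.compile(r"\.{2,3}"), " "),                  # medium ... and short .. pauses
    (re.compile(r"<X{1,2}>"), " "),                 # indecipherable <X>, uncertain <XX>
    (re.compile(r"[@†§™]"), " "),                   # laughter, cough, sigh, tut
    (re.compile(r"\bhh\b"), " "),                   # creaky voice
    (re.compile(r"--"), " "),                       # whole-word false start if--if
    (re.compile(r"RPØ\s*"), ""),                    # zero-relative marker
    (re.compile(r"[\[\]]"), ""),                    # relative-clause brackets
]


def strip_otp_codes(line: str) -> str:
    """Remove the listed transcription codes and normalise whitespace."""
    for pattern, replacement in OTP_PATTERNS:
        line = pattern.sub(replacement, line)
    return " ".join(line.split())
```

For instance, `strip_otp_codes("she knew people [RPØ was with them] @ ...(3) right")` yields `"she knew people was with them right"`.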

3. Tagging software and its adaptation to the challenges of NECTE

3.1 Background: CLAWS and Template Tagger

To explain the approach adopted to POS-tagging NECTE, we provide here some background on the design of the UCREL tagging system, including recent developments.

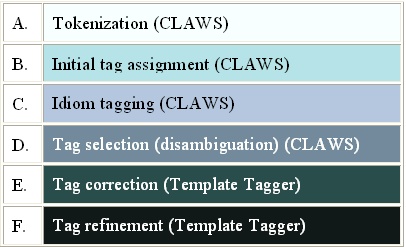

Since the mid-1990s, the UCREL team have been using two programs in tandem to assign POS-tags to English language texts: CLAWS, and Template Tagger (see Garside & Smith 1997 and Fligelstone et al. 1997 respectively). [2] The following is an outline of the tagging process, showing the division of labour between the programs:

Figure 1. UCREL POS-tagging schema.

Step A: Tokenization divides up the text or corpus to be tagged into individual (1) word tokens and (2) orthographic sentences.
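Step A can be illustrated with a small tokenizer. This is a sketch under our own assumptions, not the CLAWS tokenizer: it splits orthographic sentences at ., ? or ! followed by whitespace, and separates enclitics such as n't and 'd, so that a Tyneside form such as divn't yields the two tokens div and n't (mirroring the way the BNC treats don't as do + n't). The enclitic list is hypothetical.

```python
import re

# Split after sentence-final punctuation followed by whitespace.
SENTENCE_BREAK = re.compile(r"(?<=[.?!])\s+")
# Words may contain apostrophes and hyphens; other punctuation is its own token.
WORD = re.compile(r"[\w'-]+|[^\w\s]")
# Enclitics split off the end of a word (hypothetical list).
ENCLITIC = re.compile(r"(?i)^(.+?)(n't|'s|'d|'re|'ve|'ll|'m)$")


def tokenize(text: str) -> list[list[str]]:
    """Return a list of orthographic sentences, each a list of word tokens."""
    sentences = []
    for chunk in SENTENCE_BREAK.split(text.strip()):
        tokens = []
        for token in WORD.findall(chunk):
            match = ENCLITIC.match(token)
            # div + n't, I + 'd, and so on; other tokens pass through unchanged.
            tokens.extend(match.groups() if match else [token])
        sentences.append(tokens)
    return sentences
```

Applied to example (1) with a final question mark added, this gives one sentence whose tokens include `"div"` and `"n't"` as separate items.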

Step B: Initial tag assignment assigns to each word token one or more candidate POS-tags. For example, the token love can be tagged as a singular common noun (NN1), a base form verb (VV0), or an infinitive (VVI). This process uses either (a) a lexicon listing word types, together with a set of potential tags; or, if the word is not listed in the lexicon, (b) procedures for 'guessing' its potential POS-tags on the basis of morphological features.
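A minimal sketch of Step B follows, assuming a toy lexicon and a handful of suffix rules of our own devising; the actual CLAWS guessing procedures are far more elaborate.

```python
# Toy lexicon: word -> candidate C7/C8-style tags (entries are illustrative).
LEXICON = {
    "love": ["NN1", "VV0", "VVI"],
    "gan": ["VV0"],
    "wor": ["APPGE"],
}

# Hypothetical suffix rules used when a word is not in the lexicon.
SUFFIX_GUESSES = [
    ("ing", ["VVG", "NN1", "JJ"]),
    ("ed", ["VVD", "VVN", "JJ"]),
    ("ly", ["RR"]),
    ("s", ["NN2", "VVZ"]),
]

# Hypothetical fallback for unknown words with no recognised suffix.
OPEN_CLASS_DEFAULT = ["NN1", "VV0", "JJ"]


def candidate_tags(token: str) -> list[str]:
    """Return candidate tags for a token: lexicon first, then guessing."""
    word = token.lower()
    if word in LEXICON:
        return LEXICON[word]
    for suffix, tags in SUFFIX_GUESSES:
        if word.endswith(suffix):
            return tags
    return OPEN_CLASS_DEFAULT
```

Without a lexicon entry, a dialect word such as knooled would only be guessed from its -ed ending, which is one reason why adding dialect entries to the lexicon (section 3.2.1) matters.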

Step C: Idiom tagging is a matching procedure which operates on lists of patterns which might loosely be termed "idioms". Among these are:

- a list of multi-words such as because of, so long as and of course

- a list of place name expressions (e.g. Mount X, where X is some word beginning with a capital letter)

- a list of personal name expressions (e.g. Dr. (X) Y, where X and Y are words beginning with a capital letter)

- a list of foreign or classical language expressions used in English (e.g. en masse, hoi polloi)
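Idiom matching can be sketched as a longest-match lookup over token sequences. Following the ditto-tag convention visible in rules later in this paper (e.g. RR21 RR22 for and that), each word of an n-word unit receives the unit's tag followed by its length and position. The idiom table is a tiny hypothetical sample, and the wildcard patterns mentioned above (e.g. Mount X) are omitted.

```python
from typing import Optional

# Hypothetical idiom list: word sequence -> base tag of the whole unit.
IDIOMS = {
    ("because", "of"): "II",
    ("of", "course"): "RR",
    ("so", "long", "as"): "CS",
}
MAX_LEN = max(len(key) for key in IDIOMS)


def tag_idioms(tokens: list[str]) -> list[Optional[str]]:
    """Assign ditto tags to idioms (longest match first); None elsewhere."""
    lowered = [t.lower() for t in tokens]
    tags: list[Optional[str]] = [None] * len(tokens)
    i = 0
    while i < len(tokens):
        for n in range(MAX_LEN, 1, -1):
            key = tuple(lowered[i:i + n])
            if len(key) == n and key in IDIOMS:
                for k in range(n):
                    # e.g. so/long/as -> CS31, CS32, CS33
                    tags[i + k] = f"{IDIOMS[key]}{n}{k + 1}"
                i += n
                break
        else:
            i += 1  # no idiom starts at this position
    return tags
```

Tokens left as `None` would keep the candidate tags assigned in Step B.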

Step D: Tag selection (or disambiguation) chooses the most probable tag from any ambiguous set of tags associated with a word token by tag assignment. This stage uses a probabilistic method of disambiguation, based on (a) the default likelihood value of each possible tag for each word (see Step B), and (b) the probability of each tag in the context of the tags assigned to surrounding words (called "tag transition probabilities").
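The way Step D combines the two kinds of evidence can be illustrated with a first-order hidden Markov model decoded by the Viterbi algorithm. This is a standard textbook formulation offered as a sketch; it is not claimed to reproduce the exact CLAWS computation, and the probabilities and the `UNSEEN` floor in the usage lines are invented.

```python
import math

UNSEEN = 1e-6  # hypothetical floor for unseen tag transitions


def viterbi(candidates, lexical, transition, start="<s>"):
    """Return the most probable tag sequence.

    candidates: list of candidate-tag lists, one per token (Step B output)
    lexical:    list of dicts giving P(tag | word) for each token
    transition: dict mapping (previous tag, tag) to P(tag | previous tag)
    """
    # best[tag] = (log probability of the best path ending in tag, that path)
    best = {start: (0.0, [])}
    for tags, lex in zip(candidates, lexical):
        new_best = {}
        for tag in tags:
            new_best[tag] = max(
                (score
                 + math.log(transition.get((prev, tag), UNSEEN))
                 + math.log(lex[tag]),
                 path + [tag])
                for prev, (score, path) in best.items()
            )
        best = new_best
    return max(best.values())[1]


# Usage with invented figures for "i love rabbit":
candidates = [["PPIS1"], ["NN1", "VV0"], ["NN1"]]
lexical = [{"PPIS1": 1.0}, {"NN1": 0.6, "VV0": 0.4}, {"NN1": 1.0}]
transition = {("<s>", "PPIS1"): 0.5, ("PPIS1", "NN1"): 0.01,
              ("PPIS1", "VV0"): 0.5, ("NN1", "NN1"): 0.1, ("VV0", "NN1"): 0.3}
print(viterbi(candidates, lexical, transition))  # ['PPIS1', 'VV0', 'NN1']
```

Although NN1 is the more likely tag for love in isolation, the transition probabilities favour the verb reading after a subject pronoun.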

Step E: Tag correction is one of the two main functions of the Template Tagger program. Template tagging is like idiom tagging in CLAWS, but with much more advanced pattern-matching. It uses linguistic knowledge, expressed in the form of hand-written template rules, to eliminate many of the errors introduced (or left unresolved) by CLAWS. The following rule illustrates their expressivity: it changes the tag on words like after or before from conjunction (CS) to preposition (II) if no finite verb occurs before the next 'hard' punctuation mark, within a window of 16 words. [3]

#AFTER [CS^II] II, ([!#FINITE_VB])16, #PUNC1
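A rough Python rendering of this rule may help readers unfamiliar with the notation. We read ( )16 as 'up to 16 tokens' (the reading given later in this paper for ( )4), simplify [CS^II] to 'a token currently tagged CS', assume punctuation tokens are tagged with the punctuation character itself, and fill #AFTER, #FINITE_VB and #PUNC1 with hypothetical samples.

```python
# Hypothetical samples of the classes named in the rule.
AFTER_CLASS = {"after", "before"}
FINITE_VB = {"VV0", "VVD", "VVZ", "VBZ", "VBR", "VBM", "VBDZ", "VBDR",
             "VD0", "VDD", "VDZ", "VH0", "VHD", "VHZ", "VM"}
PUNC1 = {".", "?", "!", ";"}


def after_rule(words, tags, window=16):
    """Retag CS as II when hard punctuation follows with no finite verb first."""
    tags = list(tags)
    for i, word in enumerate(words):
        if word.lower() not in AFTER_CLASS or tags[i] != "CS":
            continue
        # Up to `window` intervening tokens, then the punctuation token itself.
        for j in range(i + 1, min(i + 2 + window, len(words))):
            if tags[j] in PUNC1:
                tags[i] = "II"
                break
            if tags[j] in FINITE_VB:
                break
    return tags
```

In we left after the match. no finite verb follows after, so it becomes II; in after we left. the finite verb left blocks the change.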

Step F: Tag refinement, the other main function of Template Tagger, has been implemented only recently. Using additional hand-crafted rules, the software makes certain POS-tags in the tagged output more discriminatory. It distinguishes, for example:

- between auxiliary and lexical uses of be, do and have [4]

- between complementizer and relativizer uses of that

- between relative and interrogative uses of the pronouns which, who, whom and whose

Thus, the set of tags — or "tagset" — applied to corpus data has been expanded to make it more useful for subsequent linguistic analysis. We refer to this new tagset as "C8", since it is an incremental refinement of a previous tagset, called "C7". [5] The C8 tagset is listed on the UCREL website.
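Tag refinement can be sketched in the same way. The function below renders the rule given in note [4], which retags VBZ as the auxiliary VABZ when a present participle (V*G) follows after at most four intermediate adverbial tags; the contents of #INTERMED_ADV are our assumption.

```python
# Hypothetical contents of the #INTERMED_ADV class.
INTERMED_ADV = {"RR", "RG", "RT", "XX"}


def is_present_participle(tag: str) -> bool:
    """Match the V*G pattern (VVG, VBG, VDG, VHG)."""
    return tag.startswith("V") and tag.endswith("G")


def refine_be_aux(tags: list[str], max_gap: int = 4) -> list[str]:
    """Retag VBZ as VABZ before a participle, allowing up to max_gap adverbials."""
    tags = list(tags)
    for i, tag in enumerate(tags):
        if tag != "VBZ":
            continue
        # Skip over at most max_gap intermediate adverbial tags.
        j = i + 1
        while j < len(tags) and j - i - 1 < max_gap and tags[j] in INTERMED_ADV:
            j += 1
        if j < len(tags) and is_present_participle(tags[j]):
            tags[i] = "VABZ"
    return tags
```

For a sequence such as she is not really going, tagged PPHS1 VBZ XX RR VVG, the VBZ becomes VABZ; before an adjective it stays VBZ.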

Further adjustments were made to the tagging system in a project designed to enhance the tagging of the British National Corpus. These efforts addressed all areas of the tagging system, but were most successful in phases B, D and E of Figure 1 above. Because the corpus contains 5 million words of informal speech, and 5 million of formal speech, special resources were created for processing spoken data: a new lexicon and new data on tag transition probabilities (for Step D) were created, derived from the spoken part of the BNC-sampler, a hand-corrected subset of the BNC. [6]

3.2 POS-tagging enhancements for NECTE

Because NECTE is a much smaller and dialectally more homogeneous corpus than the spoken part of the BNC, it offers greater scope for identifying specific tagging problems, and in turn developing appropriate strategies for dealing with them. Most instances of special lexical and grammatical features of Tyneside dialect were flagged up by the team in Newcastle in advance of tagging at Lancaster. Both sites identified further problematic cases through random sampling of the POS-tagged data. However, financial and time constraints on the project precluded a full manual check and correction of the tagging of the entire corpus, and the current release of the corpus is by no means error-free.

In principle, revisions could be made to any of the component phases A to F in Figure 1 to improve the POS-tagging of NECTE. In practice, UCREL's approach to tuning its tagging software to NECTE has been to focus on those areas of the system that contributed most to error reduction in its tagging of the BNC, namely, expansion of the lexicon, and rule-based disambiguation with Template Tagger. There were at least two other areas of the tagging system that might ultimately yield further improvements, but which were not adjusted. These were in respect of the probabilistic elements in CLAWS, involving use of NECTE itself:

- to derive a probability for each candidate tag in the lexicon

- to retrain the matrix of tag transition probabilities that CLAWS uses for tag disambiguation (Step D above)

These two elements were developed to good effect on the BNC (see Smith & McEnery 2000). The hand-edited BNC-sampler corpus, containing two million words from a range of spoken and written genres, yielded 'gold-standard' training data for tagging the rest of the corpus. In the case of NECTE, however, no comparable training data (either from NECTE itself or an analogous dataset containing Tyneside dialect) was available.

We therefore used tag probabilities, and tag transition probabilities, derived from the spoken section of the BNC-sampler.
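Had suitable hand-corrected Tyneside data existed, the two probability resources could in principle have been re-estimated from it by relative-frequency counting. The sketch below shows that idea in its simplest form, assuming a corpus represented as sentences of (word, tag) pairs; it applies no smoothing, which a real tagger would need for unseen words and transitions.

```python
from collections import Counter, defaultdict


def train(tagged_sentences):
    """Estimate P(tag | word) and P(tag | previous tag) by relative frequency."""
    word_tag = defaultdict(Counter)   # word -> tag counts
    bigrams = Counter()               # (previous tag, tag) counts
    prev_totals = Counter()           # how often each tag precedes another
    for sentence in tagged_sentences:
        prev = "<s>"                  # sentence-start pseudo-tag
        for word, tag in sentence:
            word_tag[word.lower()][tag] += 1
            bigrams[(prev, tag)] += 1
            prev_totals[prev] += 1
            prev = tag
    lexical = {
        word: {tag: n / sum(counts.values()) for tag, n in counts.items()}
        for word, counts in word_tag.items()
    }
    transition = {(p, t): n / prev_totals[p] for (p, t), n in bigrams.items()}
    return lexical, transition
```

The two dictionaries returned have the shape a Viterbi-style disambiguator would consume: per-word tag likelihoods and a matrix of tag transition probabilities.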

3.2.1 Lexical items

Most single-word lexical items were handled straightforwardly by adding entries to the CLAWS lexicon. Examples include those mentioned above, namely boody (entered as a noun), knooled (adjective) and palatic (adjective), as well as the following:

nouns (tagged NN1):

tuggy (= a children's street game); mackem (= a person from Sunderland)

verbs (tagged VV0):

gan (= equivalent to go); kep (= equivalent to catch)

adjectives (tagged JJ):

cush (meaning 'great')

pronouns:

wor = 'our' (= possessive form of personal pronoun); tagged APPGE

mesel, hisself, theirself, theirselves, etc. (=reflexive personal pronoun); tagged PPX1 or PPX2

auxiliaries:

div = a regional variant of the auxiliary do, non-3rd person singular present tense; tagged VAD0. Example (1) contains div in its typical usage with a negative enclitic.

(1) i mean people divn't eat rabbit now do they (pvc18)

If a word was flagged as dialectal but it already had an entry in the standard CLAWS lexicon, with a different set of potential POS-tags, we allowed the dialectal entry to take precedence. For instance, bray has a much higher likelihood of being a verb in Tyneside English than in Standard English. The replacement lexicon entry for bray reflects this with an '@' symbol (indicating < 0.1 probability of occurrence) on the common noun and proper noun tags, but not the verb tag:

bray VV0 NN1@ NP1@
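One way to picture how such an entry feeds Step D is to convert its markers into numeric likelihoods. In the sketch below, the weights 1.0 and 0.05 are hypothetical values chosen only so that '@'-marked tags end up below 0.1 after normalisation (for entries with at least one unmarked tag); the internal CLAWS values may differ.

```python
RARE_WEIGHT = 0.05    # hypothetical weight for '@'-marked tags
COMMON_WEIGHT = 1.0   # hypothetical weight for unmarked tags


def parse_lexicon_entry(line):
    """Turn 'word TAG TAG@ ...' into (word, {tag: probability})."""
    word, *tags = line.split()
    weights = {}
    for tag in tags:
        if tag.endswith("@"):
            weights[tag[:-1]] = RARE_WEIGHT
        else:
            weights[tag] = COMMON_WEIGHT
    total = sum(weights.values())
    return word, {tag: w / total for tag, w in weights.items()}
```

For the bray entry above, VV0 receives about 0.91 and NN1 and NP1 about 0.045 each.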

Sometimes a single lexical item is represented as a string of words, e.g. in a canny few, three words function together as a determiner/pronoun:

(2) <u who="informantTlsg15"> but here oh there's a canny few like they've got to go to <pause/> beacon lough for a school from here the older ones (tlsg15)

Similar examples include for an instance (a multi-word adverb), and jack shine the lantern (a multi-word noun). Most such multi-word expressions are grammatically unambiguous — they allow one tag only — and can be caught by additions to the CLAWS idiom list. However, sequences such as and that require disambiguation. In (3) and that is a multi-word adverb, whereas in (4) each word has a regular grammatical use — and as a conjunction, that as a determiner-pronoun (i.e. a determiner used pronominally).

(3) but with me being a girl i was very eh watchful and that but oh yes i used to <anchor id="tlsg16necteortho0600"/>wish i'd had a better type of house and things like that (tlsg16) [7]

(4) i don't i don't think there's any good prospects for anybody up here and that 's what i've al that's what i said in the first place (tlsg04)

Hence, they call for more context-sensitive rules such as:

and RR21, that RR22, [#PUNC/#SUBJECT_PNN]

i.e. tag and that as a two-part adverb if it is followed by any form of punctuation, or a subject pronoun (which is likely to indicate the start of a new clause).
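Rendered as Python, the rule might look like the sketch below. The membership of #PUNC and #SUBJECT_PNN is our own guess (we leave out you and it, which are also object forms), and treating the end of an utterance as punctuation is likewise an assumption.

```python
# Hypothetical contents of #PUNC and #SUBJECT_PNN.
PUNC = {".", ",", "?", "!", ";", ":"}
SUBJECT_PNN = {"i", "he", "she", "we", "they"}


def tag_and_that(words, tags):
    """Retag 'and that' as RR21 RR22 before punctuation or a subject pronoun."""
    tags = list(tags)
    for i in range(len(words) - 1):
        if words[i].lower() == "and" and words[i + 1].lower() == "that":
            # Assumption: the end of the utterance counts as punctuation.
            following = words[i + 2].lower() if i + 2 < len(words) else "."
            if following in PUNC or following in SUBJECT_PNN:
                tags[i], tags[i + 1] = "RR21", "RR22"
    return tags
```

In example (4), and that is followed by 's, so the conjunction and determiner-pronoun tags are left untouched.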

3.2.2 Interjections, discourse markers and unclassifiable items

As with many taggers, CLAWS (or rather, the C8 tagset) does not have a specific tag to represent discourse markers. This is partly because of the software's long-standing application to Standard (written) English texts, where discourse markers are comparatively rare; and also because discourse markers do not constitute a discrete, easily recognizable category, particularly in the domain of automatic tagging. We have attempted to capture the more discoursal uses of such items with the existing C8 tag UH, which originally represented interjections only.

Particularly frequent forms with this tag in the Tyneside data include: wey, like, aye, ah, well, uhhuh, huh, the first four of which appear in the following excerpt:

(5)

<u who="informant">but they're not ha- not half as kind as the <pause/> <event desc="interruption"/> village people </u>

<u who="interviewer"> ah <pause/> as what they were at home like aye </u>

<u who="informant">no wey no I mean <pause /> if anybody was ill there they were in there they would bring the food in for them and

(tlsg33)

To reflect the strong bias in NECTE towards discoursal use of items such as like, numerous additional template rules — in addition to those applied in the spoken BNC — have been written, for example: [8]

not, really, like UH, no

and, then, like UH, [VV*]

you UH21, know [!VVI] UH22, like UH, [C*]

The last rule reflects the fact that like readily co-occurs with other discourse markers, including multi-word expressions such as you know. In this case the fragment [!VVI] ('not an infinitive') blocks you know from being treated as a discourse marker if CLAWS has already identified know as an infinitive, as typically happens in the context of questions formed with do, e.g. Do you know...?

Other multi-word expressions in this category include I mean and dear me how.
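The three template rules given earlier in this section can be emulated with a small pattern matcher. We read each comma-separated item as one token: a bare word must match literally, a bracketed tag pattern constrains the tag (with * as a wildcard and ! as negation), and a tag written after an item is the new tag to assign. This reading of the notation, and the matcher itself, are our own sketch rather than the Template Tagger implementation.

```python
from fnmatch import fnmatchcase

# Each rule is a list of (word, tag pattern, negate, new tag); None = no constraint/change.
RULES = [
    # not, really, like UH, no
    [("not", None, False, None), ("really", None, False, None),
     ("like", None, False, "UH"), ("no", None, False, None)],
    # and, then, like UH, [VV*]
    [("and", None, False, None), ("then", None, False, None),
     ("like", None, False, "UH"), (None, "VV*", False, None)],
    # you UH21, know [!VVI] UH22, like UH, [C*]
    [("you", None, False, "UH21"), ("know", "VVI", True, "UH22"),
     ("like", None, False, "UH"), (None, "C*", False, None)],
]


def element_matches(element, word, tag):
    """Check one rule element against a (word, tag) pair."""
    expected_word, pattern, negate, _new_tag = element
    if expected_word is not None and word.lower() != expected_word:
        return False
    # With negate=True the tag must NOT match the pattern.
    if pattern is not None and fnmatchcase(tag, pattern) == negate:
        return False
    return True


def apply_rules(words, tags, rules=RULES):
    """Apply each rule at every position where all of its elements match."""
    tags = list(tags)
    for rule in rules:
        for i in range(len(words) - len(rule) + 1):
            if all(element_matches(e, words[i + k], tags[i + k])
                   for k, e in enumerate(rule)):
                for k, (_w, _p, _n, new_tag) in enumerate(rule):
                    if new_tag is not None:
                        tags[i + k] = new_tag
    return tags
```

As described above, the negated pattern stops you know from being retagged when CLAWS has tagged know as an infinitive, as in do you know like and.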

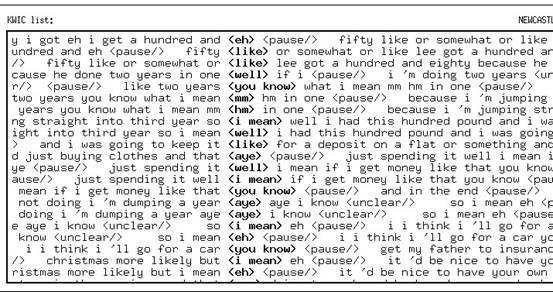

We illustrate the richness of items in NECTE tagged in this way with the following screen image of a concordance search for UH:

Figure 2. Screen image of a concordance search in NECTE for tag UH, representing interjections and discourse markers.

One further tag to mention in this context is the so-called "unclassifiable" tag, FU. This was reserved for truncated, or partially-uttered, words (see above, on 'pesky critters'). They frequently occur in false starts, for example: [9]

(6) i don't think we <w type=FU> da </w> we dare say half what the children say now (tlsg26)

(7) oh no because my kids <w type=FU> comp </w> well deborah complains about being cold (pvc03)
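Where a transcript still carries the OTP hyphen conventions for partial words (i-if, f-fun) or a bare fragment such as ha- in example (5), candidates for FU can be found heuristically. The sketch below is our own illustration, not the NECTE procedure: it treats X-Y as a false start only when X is a prefix of Y, which keeps compounds like night-schools intact but would misfire on words such as re-read.

```python
def mark_truncations(tokens):
    """Return (token, tag) pairs, tagging likely word fragments as FU."""
    out = []
    for tok in tokens:
        # A bare fragment ending in a hyphen, e.g. "ha-".
        if tok.endswith("-") and len(tok) > 1:
            out.append((tok, "FU"))
            continue
        head, sep, tail = tok.partition("-")
        # "f-fun": the fragment is a prefix of the completed word.
        if sep and head and tail.lower().startswith(head.lower()):
            out.append((head + "-", "FU"))
            out.append((tail, None))
        else:
            out.append((tok, None))  # left for the tagger to decide
    return out
```

The `None` entries are simply left for the normal tagging steps.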

3.2.3 Other non-standard grammatical forms

We have already mentioned some Tyneside grammatical forms that are unambiguous, e.g. mesel, wor and div. Here we discuss forms that are in use in Standard English as well, but are liable to have a different grammatical reading according to context. Although they are particularly prevalent in Tyneside dialect, some are more general features of non-standard varieties of English.

Example (8) illustrates went functioning as a past participle rather than a past tense marker. In (9) give is used in place of past tense gave. In (10), the normally subjective form we is used in object function after put.

(8) Well if I'd went to night-schools and all that <anchor id="tlsg29necteortho0340"/> carried on at school wey i would have ... (tlsg29)

(9) oh it was funny <anchor id="pvc05necteortho2160"/> it was good though and then well they give us the flat because we had two girls (pvc05)

(10) my mam both put we into swimming when we were younger we both left about the same age (pvc06)

Such examples present varying degrees of difficulty for automatic disambiguation. Irregular past participles, as in (8) above, are generally caught as long as an auxiliary can be detected in the left context. The following template rule would match such an example:

#HAVE/'s/'d [V*], ([#ADV/XX])4, #TYNESIDE_PASTPART [VVD] VVN

Interpretation: in the context of the pattern:

- any form of have, or contracted form 's or 'd , that has been tagged by CLAWS as a verb,

- followed by up to four words tagged as adverb or negative particle,

- followed by any listed irregular dialect form that may function as a past participle — but which has in fact been tagged by CLAWS as a past tense verb

→ change the last past tense verb into a past participle
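The rule can be rendered as follows. The members of #TYNESIDE_PASTPART other than went are hypothetical, and #ADV is approximated as any tag beginning with R.

```python
# Forms of have, including clitics (the rule's #HAVE/'s/'d).
HAVE_FORMS = {"have", "has", "had", "having", "'ve", "'s", "'d"}
# Hypothetical listing for #TYNESIDE_PASTPART.
TYNESIDE_PASTPART = {"went", "took", "wrote"}


def fix_past_participles(words, tags, max_gap=4):
    """Retag a listed dialect form from VVD to VVN after a verbal form of have."""
    tags = list(tags)
    for i, word in enumerate(words):
        if word.lower() not in HAVE_FORMS or not tags[i].startswith("V"):
            continue
        # Skip up to max_gap adverbs (R*) or negative particles (XX).
        j = i + 1
        while (j < len(words) and j - i - 1 < max_gap
               and (tags[j].startswith("R") or tags[j] == "XX")):
            j += 1
        if j < len(words) and words[j].lower() in TYNESIDE_PASTPART and tags[j] == "VVD":
            tags[j] = "VVN"
    return tags
```

Applied to the first clause of example (8), if I'd went to night-schools, the tag on went changes from VVD to VVN.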

The second and third types of example are much trickier to fix. It may be possible to identify the use of give and other forms (e.g. come, seen, done) as past tense markers in certain contexts, such as 'preceded by a third-person singular subject pronoun', 'not preceded by an auxiliary form of have or form of be' or 'followed by a past tense form', as in e.g.:

(11) no stupid ali man he give the walkman to ehm parmi and decided to eh <pause/> want it back straight away

(12) i seen what's her name this morning

However, few examples in the corpus provide such explicit clues, and even then the clues may not be wholly reliable. For this reason, we have not yet been able to tag non-standard past tense forms automatically.

Another distinction that has remained unmarked is the objective use of the pronoun we. To recognize such uses reliably, the tagger would presumably need to know, at a minimum, the argument structure of the verb (to determine whether it is being used transitively) and its semantics (e.g. to identify verbs of saying and thinking, after which we is more likely to be in subject function). Template Tagger currently has only rudimentary knowledge of the latter, and none of the former. [10]

For these less tractable types of disambiguation, the most practical solution is probably to locate the phenomena by concordance queries, and correct the relevant POS-tags by hand. The corrections can be made available in a future release of the corpus.
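A minimal sketch of such candidate-finding queries is given below, assuming a tagged text represented as a list of (word, tag) pairs. The two predicates are hypothetical encodings of heuristics discussed above (give after a third-person singular subject pronoun; we immediately after a lexical verb). They will over-generate, e.g. matching we after think, which is why the hits are meant for manual review rather than automatic retagging.

```python
def concordance(tagged, predicate, width=4):
    """Return (left context, keyword, right context) for every matching token."""
    hits = []
    for i, (word, _tag) in enumerate(tagged):
        if predicate(tagged, i):
            left = " ".join(w for w, _ in tagged[max(0, i - width):i])
            right = " ".join(w for w, _ in tagged[i + 1:i + 1 + width])
            hits.append((left, word, right))
    return hits


def possible_past_give(tagged, i):
    """give tagged as base form or infinitive right after he, she or it."""
    word, tag = tagged[i]
    prev = tagged[i - 1][0].lower() if i > 0 else ""
    return word.lower() == "give" and tag in {"VV0", "VVI"} and prev in {"he", "she", "it"}


def possible_object_we(tagged, i):
    """we immediately after a lexical verb (it may well still be a subject)."""
    return tagged[i][0].lower() == "we" and i > 0 and tagged[i - 1][1].startswith("VV")
```

Run over part of example (10), the second predicate returns the hit ("my mam both put", "we", "into swimming").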

3.3 Lemmatization

The grammatically-annotated release of NECTE contains, in addition to POS-tagging, a lemma for every orthographic word. A lemma is like a dictionary headword, grouping a set of inflectional variants (e.g. see, sees, saw, seeing) under the basic, uninflected form (in this case, see). These forms share the same part-of-speech category (in this case, verb).

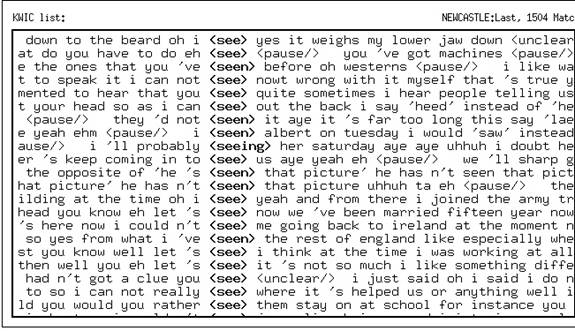

Lemmatization allows users of the corpus to make lexico-grammatical searches more efficient (because the variants do not need to be found separately), and more discriminatory (because lemmas are part-of-speech based). Figure 3 illustrates a concordance search of NECTE based on the verb lemma SEE.

Figure 3. Concordance based on a search for lemma SEE in NECTE.

The lemmatization rules employed in NECTE originally derive from Beale (1987), but have been considerably revised in the light of lemmatization carried out on the BNC-World edition.
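The point that lemmas are part-of-speech based can be illustrated with a toy lemmatizer keyed on (word, tag) pairs, plus a lemma search over tagged text of the kind shown in Figure 3. The irregular-form table and the naive suffix stripping are hypothetical simplifications (they would mishandle forms such as making), not the rules derived from Beale (1987).

```python
# Hypothetical irregular forms, keyed on (word, tag) so that saw/NN1 is untouched.
IRREGULAR = {
    ("saw", "VVD"): "see",
    ("seen", "VVN"): "see",
    ("went", "VVD"): "go",
    ("went", "VVN"): "go",
}

# Naive suffix stripping for regular inflections (hypothetical).
SUFFIXES = {"VVZ": "s", "VVG": "ing", "NN2": "s"}


def lemmatize(word: str, tag: str) -> str:
    """Return the lemma of a word given its POS-tag."""
    w = word.lower()
    if (w, tag) in IRREGULAR:
        return IRREGULAR[(w, tag)]
    suffix = SUFFIXES.get(tag)
    if suffix and w.endswith(suffix):
        return w[: -len(suffix)]
    return w


def find_lemma(tagged, lemma, tag_prefix="VV"):
    """Positions of tokens whose tag starts with tag_prefix and whose lemma matches."""
    return [i for i, (w, t) in enumerate(tagged)
            if t.startswith(tag_prefix) and lemmatize(w, t) == lemma]
```

A search for the verb lemma see therefore finds saw/VVD, sees and seeing in a single query, but not saw/NN1.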

4. Conclusion

We have outlined some of the issues — and our preferred solutions — in transcribing and grammatically tagging a corpus of vernacular English with strong dialectal features.

Tagliamonte (2007: 205) summarises the transcription process for large dialectal corpora as striking a balance between 'consistency, trade-offs and thinking-ahead'. The creation of the NECTE corpus exemplifies exactly these issues. Because its development was protracted, and because the original data sources were collected by researchers whose objectives differed from each other's (and from those of the NECTE team), 'thinking ahead' in Tagliamonte's sense was impossible in certain respects. In others, however, NECTE goes well beyond the kind of dialect corpus transcription that Tagliamonte is referring to, in that it has been purpose-built to appeal to the widest possible range of end-users and with longevity in mind. Naturally, this has entailed much effort to achieve the highest levels of 'consistency', but it has also meant numerous 'trade-offs' in the transcription process, both to accommodate TEI conformance and to allow for the enrichment of the resource through UCREL's POS-tagging.

Although the UCREL tagging software had not previously been exposed to large quantities of dialect data, it has been possible to adapt the resources of the two programs, CLAWS and Template Tagger, to handle the special features of Tyneside dialect. UCREL's efforts have concentrated on the CLAWS lexicon and pattern-matching rules in Template Tagger, which can capture some — although not all — of the non-standard features of this variety. The additional categories provided for in the C8 tagset facilitate many kinds of study on grammatical (or lexico-grammatical) variation and change. At the same time, as with the transcription protocol, trade-offs have had to be made. For example, some non-standard uses of verbs and pronouns in Tyneside English could not be identified automatically, because of limitations on linguistic knowledge (e.g. about transitivity) currently incorporated into the software.

The POS-tagging in the present release version of NECTE is not set in stone. We are currently looking at ways of systematically locating and eliminating tagging errors, short of manually editing the entire corpus. A useful precedent in this respect is Leech & Smith's (2000) survey of BNC tagged data, which highlighted the most error-prone tagging decisions in stratified samples taken from the corpus. [11]

Further, our experience in dealing with the numerous features of non-standard lexis and grammar in NECTE suggests that the methods presented here can readily be transferred to tagging other dialects of English.

Acknowledgments

We are grateful to Anneli Meurman-Solin for her advice and encouragement and to the audience at the ICAME-25 conference in Helsinki in May 2006, where these ideas were first presented. We would like to express our particular thanks to Geoffrey Leech, who delivered the POS-annotation part of this paper at the conference. He has played a major role in pioneering UCREL's linguistic annotation software, providing an excellent platform for the development work described in this paper.

Notes

[1] Should you wish to hear a sound file from this corpus, please visit http://research.ncl.ac.uk/necte/corpus.htm, where you will find a 'download request form'. Once your request has been approved, you will have access to all the text and sound files generated by this enhancement project.

[2] On the development of CLAWS up to the mid-1990s, see Marshall (1983) and Leech et al. (1994).

[3] Key: The two commas separate this rule into three portions. Square brackets surround tags in the input to Template Tagger. '#' indicates a class of words or tags defined in a separate file; e.g. #FINITE_VB lists all POS-tags that can be considered finite verbs, and #PUNC1 lists 'hard' punctuation marks (. ? ! ;).

[4] As an example, the rule:

[VBZ] VABZ, ([#INTERMED_ADV])4, [V*G]

adjusts the tag VBZ (third person singular present of the verb BE) to an auxiliary (VABZ) if it is followed by a present participle (V*G). Up to four adverbial tags (e.g. adverbs, negatives) can intervene between BE and the participle.
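The effect of this rule can be approximated in a few lines of code. The sketch below is not Template Tagger itself: it assumes a simple list of (word, tag) pairs, treats * in V*G as a wildcard, and uses a hypothetical membership for the #INTERMED_ADV class, whose actual contents are defined in a separate file not reproduced here.

```python
from fnmatch import fnmatchcase

# Hypothetical contents of the #INTERMED_ADV class (assumed for this sketch):
# RR general adverb, XX negative (not, n't), RG degree adverb, RL locative adverb.
INTERMED_ADV = {"RR", "XX", "RG", "RL"}


def apply_vbz_rule(tagged, max_intervening=4):
    """Retag VBZ as VABZ when it is followed, after at most `max_intervening`
    tags from INTERMED_ADV, by a tag matching the wildcard pattern V*G."""
    out = list(tagged)
    for i, (word, tag) in enumerate(tagged):
        if tag != "VBZ":
            continue
        j = i + 1
        # Skip over up to `max_intervening` adverbial tokens.
        while (j < len(tagged) and j - i - 1 < max_intervening
               and tagged[j][1] in INTERMED_ADV):
            j += 1
        # Retag only if the next non-skipped token is a present participle.
        if j < len(tagged) and fnmatchcase(tagged[j][1], "V*G"):
            out[i] = (word, "VABZ")
    return out


if __name__ == "__main__":
    sentence = [("he", "PPHS1"), ("is", "VBZ"), ("not", "XX"),
                ("always", "RR"), ("working", "VVG")]
    print(apply_vbz_rule(sentence)[1])  # ('is', 'VABZ')
    print(apply_vbz_rule([("it", "PPH1"), ("is", "VBZ"), ("cold", "JJ")])[1])  # ('is', 'VBZ')
```

The bounded skip loop mirrors the subscript 4 on the bracketed adverbial portion of the rule: a fifth intervening adverb stops the match, so the tag is left as VBZ.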

[5] C8 was introduced for the first time in the (re)tagging of the Brown family of corpora, that is Brown, LOB, Frown and F-LOB (see Hinrichs & Smith, forthcoming). C7 is the tagset (containing 135 tags) used for the BNC-sampler corpus, while a more basic tagset, C5 (consisting of 57 tags), was used for the full 100-million-word BNC. These tagsets are essentially based on the same principles of categorization: the difference between them lies in the degree of granularity applied.
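The difference in granularity can be illustrated by collapsing a handful of fine-grained C7 tags into their broader C5 counterparts. The mapping below is a small illustrative subset assumed for this sketch, not the official conversion table; tags absent from it are simply passed through unchanged.

```python
# Illustrative subset only (an assumption for this sketch), not a full C7-to-C5 table.
C7_TO_C5 = {
    "JJ": "AJ0",     # general adjective
    "RR": "AV0",     # general adverb
    "NN1": "NN1",    # singular common noun
    "NN2": "NN2",    # plural common noun
    "PPHS1": "PNP",  # he, she
    "PPIS1": "PNP",  # I
    "PPY": "PNP",    # you
}


def collapse(tags, mapping=C7_TO_C5):
    """Map fine-grained tags to coarser ones; unmapped tags are passed through."""
    return [mapping.get(tag, tag) for tag in tags]


if __name__ == "__main__":
    c7 = ["PPIS1", "PPHS1", "PPY"]
    c5 = collapse(c7)
    print(c5)                          # ['PNP', 'PNP', 'PNP']
    print(len(set(c7)), len(set(c5)))  # 3 1
```

Three person distinctions made among C7 pronoun tags are merged into a single C5 category, which is the sense in which the tagsets differ in granularity rather than in their principles of categorization.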

[6] Details of other adjustments made to CLAWS to handle spoken texts can be found in Garside (1995).

[7] <anchor id="..."> identifies the position of an overlap in the recording.
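For readers processing the XML files, a minimal sketch of locating such anchors with Python's standard ElementTree module is given below. The fragment, the <u> (utterance) elements and their who attributes are hypothetical, chosen only for illustration; the actual NECTE markup should be checked before adapting the code.

```python
import xml.etree.ElementTree as ET

# Hypothetical TEI-style fragment; the real NECTE markup may differ.
FRAGMENT = """<text>
  <u who="A">and then we went <anchor id="a1"/> down the quay</u>
  <u who="B"><anchor id="a2"/> aye</u>
</text>"""


def anchor_positions(xml_text):
    """Return (speaker, anchor id, words preceding the anchor in its utterance)
    for every <anchor> that is a direct child of a <u> element."""
    root = ET.fromstring(xml_text)
    results = []
    for u in root.iter("u"):
        words_before = len((u.text or "").split())
        for child in u:
            if child.tag == "anchor":
                results.append((u.get("who"), child.get("id"), words_before))
            # Text directly inside the child, and its tail, come after the child's start.
            words_before += len((child.text or "").split())
            words_before += len((child.tail or "").split())
    return results


if __name__ == "__main__":
    print(anchor_positions(FRAGMENT))  # [('A', 'a1', 4), ('B', 'a2', 0)]
```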

[8] Users wishing to compare NECTE with the spoken BNC should note that in the latter corpus an even broader-brush approach was taken towards tagging words capable of functioning as discourse markers. The tagging software was adjusted to apply the tag AV0 ('general adverb') for words such as well, right and like, in effect blurring the distinction between discoursal and ordinary lexical use of these items.

[9] In the BNC, the tag UNC, designating unclassified items, was used not only for truncated items but also for pause fillers. See Leech & Smith (2000) at http://ucrel.lancs.ac.uk/bnc2/bnc2guide.htm.

[10] These last two limitations also apply to spoken data in the BNC.

[11] See http://ucrel.lancs.ac.uk/bnc2/bnc2error.htm.

Sources

Newcastle Electronic Corpus of Tyneside English (NECTE), http://research.ncl.ac.uk/necte/

Text Encoding Initiative (TEI), http://www.tei-c.org/index.xml

University Centre for Computer Corpus Research on Language (UCREL), http://www.comp.lancs.ac.uk/ucrel/

Extensible Markup Language (XML), http://www.w3.org/XML/

References

Allen, W. H. A., J. C. Beal, K. P. Corrigan, W. Maguire & H. L. Moisl. 2007. "A linguistic time capsule: The Newcastle Electronic Corpus of Tyneside English". In Beal, Corrigan & Moisl (eds.) 2007b, 16-48.

Beal, J. C. 2005. "Dialect representation in texts". The Encyclopedia of Language and Linguistics, 2nd ed., 531-538. Amsterdam & London: Elsevier.

Beal, J. C. & K. P. Corrigan. 2002. "Relativisation in Tyneside English". Relativisation on the North Sea Littoral, ed. by Patricia Poussa, 33-56. Munich: Lincom Europa.

Beal, J. C. & K. P. Corrigan. 2005a. "No, Nay, Never: Negation in Tyneside English". Aspects of English Negation, ed. by Yoko Iyeiri, 139-156. Tokyo: Yushodo Press & Amsterdam: John Benjamins.

Beal, J. C. & K. P. Corrigan. 2005b. "A tale of two dialects: Relativization in Newcastle and Sheffield". Dialects Across Borders: Selected papers from the 11th International Conference on Methods in Dialectology (Methods XI), Joensuu, August 2002, ed. by Markku Filppula, Juhani Klemola, Marjatta Palander & Esa Penttilä, 211-229. (Current Issues in Linguistic Theory 273). Amsterdam: John Benjamins.

Beal, J. C., K. P. Corrigan & H. L. Moisl (eds.). 2007a. Creating and Digitizing Language Corpora, Volume 1: Synchronic Corpora. Basingstoke: Palgrave Macmillan.

Beal, J. C., K. P. Corrigan & H. L. Moisl (eds.). 2007b. Creating and Digitizing Language Corpora, Volume 2: Diachronic Corpora. Basingstoke: Palgrave Macmillan.

Beale, Adam D. 1987. "Towards a distributional lexicon". The Computational Analysis of English: A Corpus-based Approach, ed. by Roger Garside, Geoffrey Leech & Geoffrey Sampson, 149-162. London: Longman.

Cameron, Deborah. 2001. Working with Spoken Discourse. London: Sage.

Fligelstone, Steve, Mike Pacey & Paul Rayson. 1997. "How to generalize the task of annotation". In Garside, Leech & McEnery (eds.), 122-136.

Garside, Roger. 1995. "Grammatical tagging of the spoken part of the British National Corpus: A progress report". Spoken English on Computer: Transcription, Mark-up and Application, ed. by Geoffrey Leech, Greg Myers & Jenny Thomas, 161-167. London: Longman.

Garside, Roger, Geoffrey Leech & Anthony McEnery (eds.). 1997. Corpus Annotation: Linguistic Information from Computer Text Corpora. London: Longman.

Garside, Roger & Nicholas Smith. 1997. "A hybrid grammatical tagger: CLAWS4". In Garside, Leech & McEnery (eds.), 102-121.

Heslop, R. Oliver. 1892. Northumberland Words: A Glossary of Words Used in the County of Northumberland and on the Tyneside. London: English Dialect Society.

Hinrichs, Lars & Nicholas Smith. forthcoming. "The part-of-speech-tagged 'Brown' corpora: A manual of information, including pointers for successful use". To appear on ICAME CD-rom, 3rd edition.

Kirk, John. 1997. "Irish-English and contemporary literary writing". Focus on Ireland, ed. by Jeffrey L. Kallen, 190-205. Amsterdam: John Benjamins.

Leech, Geoffrey, Roger Garside & Michael Bryant. 1994. "CLAWS4: The tagging of the British National Corpus". Proceedings of the 15th International Conference on Computational Linguistics (COLING 94), 622-628. Kyoto, Japan.

Leech, Geoffrey & Nicholas Smith. 2000. Manual to Accompany The British National Corpus (Version 2) with Improved Word-class Tagging. On BNC-World CD-rom and http://ucrel.lancs.ac.uk/bnc2/bnc2postag_manual.htm.

Macaulay, Ronald K. S. 1991. "Coz it izny spelt when they say it: Displaying dialect in writing". American Speech 66: 280-291.

Marshall, Ian. 1983. "Choice of grammatical word-class without global syntactic analysis: Tagging words in the LOB corpus". Computers and the Humanities 17: 139-150.

Moisl, Hermann L. & Warren Maguire. 2008. "Identifying the main determinants of phonetic variation in the Newcastle Electronic Corpus of Tyneside English". Journal of Quantitative Linguistics 15, to appear.

Preston, Dennis R. 1985. "The Li'l Abner syndrome: Written representations of speech". American Speech 60(4): 328-336.

Preston, Dennis R. 2000. "Mowr and mowr bayud spellin: Confessions of a sociolinguist". Journal of Sociolinguistics 4(4): 614-621.

Smith, Nicholas. 1997. "Improving a tagger". In Garside, Leech & McEnery (eds.), 137-150.

Smith, Nicholas & Anthony McEnery. 2000. "Can we improve part-of-speech tagging by inducing probabilistic part-of-speech annotated lexicons from large corpora?" Recent Advances in Natural Language Processing II: Selected Papers from RANLP '97, ed. by Nicolas Nicolov & Ruslan Mitkov, 21-29. (Current Issues in Linguistic Theory 189). Amsterdam: John Benjamins.

Tagliamonte, Sali. 2007. "Consistency, trade-offs and thinking ahead". In Beal, Corrigan & Moisl (eds.) 2007a, 205-240.

Wright, Joseph. 1896-1905. The English Dialect Dictionary. London: Kegan Paul for the English Dialect Society.
