New corpora from the web: making web text more 'text-like'

Andrew Kehoe & Matt Gee

Research and Development Unit for English Studies,

Birmingham City University

In this paper we discuss the first stages in the development of the WebCorp Linguist's Search Engine. This tool makes the web more useful as a resource for linguistic analysis by enabling users to search it as a corpus on a vast scale. We report on how the Search Engine has been designed to overcome the limitations of our existing WebCorp system by bypassing commercial search engines and building web corpora of known size and composition. We examine in detail the nature of text on the web, beginning with a discussion of HTML format and the development of tools to extract the main textual content from HTML files whilst maintaining sentence and paragraph boundaries. We move on to look at other file formats, such as PDF and Microsoft Word, in an attempt to ascertain whether these offer the linguist different kinds of textual content for the building of corpora.

The potential value of the web as a source of linguistic data has been well documented in recent years, since linguists began attempting to extract examples of usage from it using commercial search engines in the mid-1990s (e.g. Bergh et al. 1998).

At that time, the Research and Development Unit for English Studies (RDUES) began developing the prototype of its WebCorp system, an online tool designed to automate the process of treating the web as a corpus. WebCorp takes a word or phrase and other parameters from the user, passes these to a commercial search engine (Google, AltaVista, etc.), then extracts the 'hit' URLs from the search engine results page. Each URL is accessed and processed, and the extracted concordances are presented in one of a choice of formats. [1]

WebCorp's reliance on commercial search engines as gatekeepers to the web has been its Achilles' heel. The tool attempts to treat the web as a corpus, but the lack of direct access to this 'corpus' means that all processing must take place in real time. Whilst this is quicker than the equivalent manual process (as used by Bergh et al. 1998), it is still somewhat slow, a problem exacerbated by increased use of the WebCorp prototype and the associated server load. With little scope for pre-processing of web texts, WebCorp cannot offer functionality such as grammatical search or complex pattern matching and, perhaps most importantly, cannot provide the linguist with any reliable statistical information. Quantitative studies are not possible with the WebCorp approach, as the total size of the 'corpus' is neither known nor even fixed. [2]

Furthermore, the composition of the web 'corpus' is not known. The web lacks reliable information on publication date (Kehoe 2006), language and author. Pages sit side by side that have been written at different times, in different languages, character sets and file formats, for different purposes and audiences, and by authors of different ages with different levels of competence. The restrictions on publication and the quality control of printed media are largely absent on the web.

As we have outlined, WebCorp attempts to treat the web itself as a corpus. Meanwhile, other linguists have instead used the web as a source of texts to build smaller corpora (Cavaglia & Kilgarriff 2001; Ghani et al. 2003; Baroni & Bernardini 2004). This 'bootstrapping' approach to the building of corpora from the web was automated in the BootCaT toolkit (Baroni & Bernardini 2004), which has subsequently been used by Sharoff (2006) to build a general (BNC-like) corpus from the web. [3]

Many researchers draw a distinction between two approaches: on the one hand, the web itself as a corpus (WebCorp, KWiCFinder) and, on the other, the web as a source of corpus data. We do not see the two as mutually exclusive. In the following section, we detail our solution, which addresses the limitations of both approaches.

When development of the WebCorp prototype began in 1998, it was clear that the long-term solution would be to develop a large-scale web search engine to give direct access to the textual content of the web, thus bypassing the restrictions imposed by a reliance on commercial search engines.

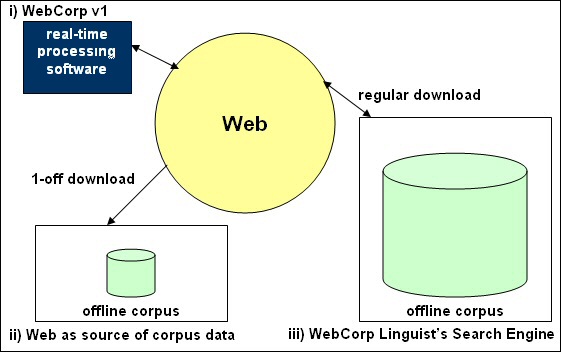

It is in the development of such a search engine that the two hitherto distinct 'web as corpus' approaches intertwine, as shown in Figure 1.

Figure 1. 'Web as corpus' approaches.

Given sufficient processing power and, more importantly, disk storage, a new option becomes possible: (iii), where the web is used as a source of data for the building of a corpus, as in (ii), but where this corpus is so large and so regularly updated that it becomes a microcosm of the web itself. The important differences are that this web corpus is of known size and composition and is available in its entirety for offline processing and analysis.

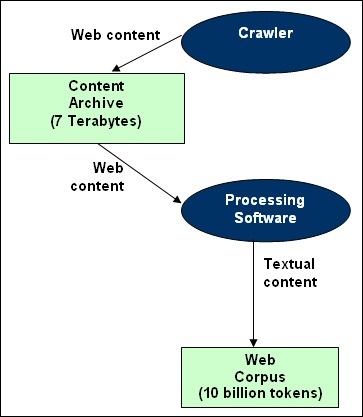

We are currently developing the large-scale WebCorp Linguist's Search Engine. The architecture (Figure 2) includes a web crawler (to download files with textual content from the web and to find links to other files to be downloaded) and linguistically-aware processing software (to prepare the downloaded documents for corpus building). It is the latter on which we focus in this paper.

Figure 2. WLSE architecture.

Our (conservative) estimate, extrapolated from figures published in 1998 (Brin & Page 1998), is that Google's cache of the web contains at least 1000 billion tokens of text. The corpus created by the WebCorp Linguist's Search Engine from web text will not contain the whole web but will instead focus on carefully targeted subsections, filtering out content of poor linguistic quality. [4] Our aim is to build a 10-billion-token web corpus over 2 years, consisting of:

- a series of domain-specific sub-corpora, updated monthly

- newspaper sub-corpora, updated daily

- a multi-terabyte 'mini web', updated monthly

Before we could begin to construct these (sub-)corpora, it was necessary to examine documents found on the web and devise a definition of 'text' in a web context. We took as our starting point that 'text' should:

- contain connected prose

- be written in sentences, delimited by full stops

- contain paragraphs

- be complete, cohesive and interpretable within itself [5]

Ide et al. (2002) specify two criteria for connected prose, stating that a text should contain at least 2000 tokens and at least 30 tokens per paragraph on average. Only 1-2% of the web pages they examined met both of these criteria, though the aim of their experiment and the motivation for their selectional criteria are explicit in the title of their paper: 'The American National Corpus: More Than the Web Can Provide' [6]. Similarly, Cavaglia and Kilgarriff (2001), in their study of web text, rejected all pages containing 'less than 2000 non-markup words'.
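The two thresholds of Ide et al. can be expressed as a simple document filter. The sketch below is illustrative only: it assumes a document has already been split into paragraphs, approximates tokens by whitespace splitting, and uses a hypothetical function name; it is not Ide et al.'s own code.

```python
def meets_ide_criteria(paragraphs, min_tokens=2000, min_avg_par_len=30):
    """Return True if a document meets both thresholds described by Ide et al. (2002).

    `paragraphs` is a list of paragraph strings. Tokens are approximated by
    whitespace splitting (an assumption made for illustration only).
    """
    token_counts = [len(p.split()) for p in paragraphs]
    total = sum(token_counts)
    if not paragraphs or total == 0:
        return False
    avg_par_len = total / len(paragraphs)  # mean tokens per paragraph
    return total >= min_tokens and avg_par_len >= min_avg_par_len
```

For example, a page of 100 paragraphs with 25 tokens each passes the length test (2500 tokens) but fails the paragraph test, which is exactly the pattern of short-paragraph HTML pages discussed later.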

In order to examine, and possibly redefine, our initial definition of 'text' on the web, we developed software capable of processing files downloaded from the web during the crawling phase. We have developed tools for language detection, date detection and duplicate/similar document detection, but will focus on text 'clean-up' in this paper; that is, converting web documents to a format ready for corpus building.

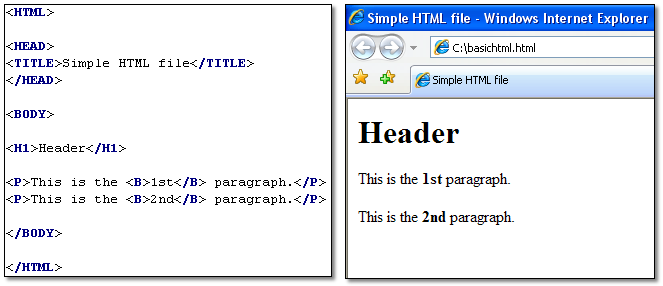

HyperText Markup Language (HTML) is the native format for texts on the web, so we began by developing tools to process HTML files. At the simplest level, an HTML file contains both text and mark-up tags, the latter indicating how different sections of the text should be displayed on screen. The basic example in Figure 3 illustrates the structure of an HTML file, with a <HEAD> section at the top, followed by the main <BODY> section. Within the body, there is a header (demarcated by <H1> tags), followed by two paragraphs (within <P> tags). Certain text (1st and 2nd) is displayed in bold type using the <B> tag.

Figure 3. Simple HTML file (left) & as displayed in web browser (right).

In reality, however, HTML files are rarely this simple and often contain a variety of mark-up tags from different generations of HTML, tags used in non-standard ways, scripting languages (e.g. JavaScript), author-defined style sheets, tagging errors, etc. HTML tags are largely presentational rather than semantic and cannot be relied upon in any linguistic analysis of textual content. There may be 'paragraphs' in an HTML file which do not contain any text, or text which is not contained within paragraph tags. Furthermore, not everything within the <BODY> tags is necessarily part of the main textual content of the file, as we shall illustrate in the following section.

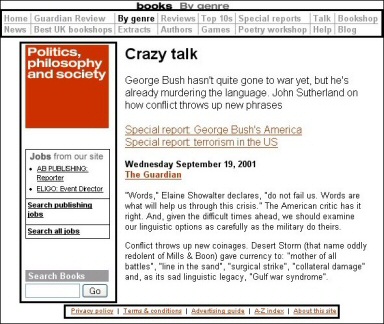

Web pages in HTML often contain text which is within the <BODY> tags but is peripheral to the main content. This is known as 'boilerplate' and in Figure 4 takes the form of a menu of links in the header, job advertisements on the left and links at the foot of the page.

Figure 4. 'Boilerplate' (marked by black boxes) is typically header, footer and navigation information.

These sections are formalities of the web, required for navigation and meta-information, but very rarely contain connected prose. They often repeat themselves within and across pages, thus dominating corpus searches and skewing statistical information. We therefore considered it undesirable for such text to be included in our web corpus and needed a way to remove these sections from HTML pages. Due to the large scale of our search engine, it was necessary for this procedure to be automated.

We began by testing the Body Text Extraction (BTE) program by Aidan Finn, later adapted by Marco Baroni, which attempts to remove boilerplate text automatically. The HTML file is treated as a sequence of entities, each of which is either a mark-up tag or a token of text. By giving each entity a value (-1 for a tag or 1 for text) and generating cumulative scores, the stretch of the file with the highest score (in effect, the longest stretch dominated by text rather than tags) is found, and this is deemed to be the main content of the page. Unfortunately, this approach results in the loss of all formatting from the original page and, due to the lack of reliable punctuation on the web, can leave us with poor-quality text (see the BTE output in Figure 6). This is particularly evident for sentence boundaries, which are essential in linguistic analysis.
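A minimal sketch of the BTE scoring idea, assuming the page has already been reduced to a flat list of entities (whole tags and whitespace-delimited text tokens). The regular-expression tokeniser and the function name are hypothetical and this is not Finn's or Baroni's code. Finding the stretch with the highest cumulative score is a maximum-subarray problem, solved here in linear time with Kadane's algorithm.

```python
import re

# Hypothetical tokeniser: an entity is either a whole tag or a whitespace-delimited text token.
ENTITY_RE = re.compile(r"<[^>]*>|[^<\s]+")


def bte_extract(html):
    """Return the text tokens in the highest-scoring contiguous stretch of entities.

    Tags score -1 and text tokens score +1; the maximum-sum stretch is found
    with Kadane's algorithm. All tags (and so all formatting) are discarded.
    """
    entities = ENTITY_RE.findall(html)
    scores = [-1 if e.startswith("<") else 1 for e in entities]

    best_sum, best_start, best_end = 0, 0, 0  # empty stretch by default
    run_sum, run_start = 0, 0
    for i, s in enumerate(scores):
        if run_sum <= 0:          # a non-positive prefix never helps: restart here
            run_sum, run_start = s, i
        else:
            run_sum += s
        if run_sum > best_sum:
            best_sum, best_start, best_end = run_sum, run_start, i + 1

    return [e for e in entities[best_start:best_end] if not e.startswith("<")]
```

Applied to a page whose navigation links surround a single paragraph, only the paragraph's tokens survive; note that the output is a bare token list, which illustrates why paragraph and heading boundaries are lost.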

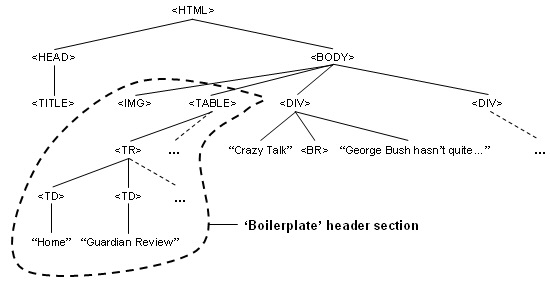

In our previous work with WebCorp, HTML documents were represented as tree structures, the beginning of the document being the root, with a branch for each subsection (Figure 5). (Note that this example also highlights the complexity of actual HTML documents, with <DIV> tags used in place of the paragraph tags shown in the simple example in Figure 3.)

Figure 5. Sections of the HTML tree for the page in Figure 4.

We have modified the tree representation to allow boilerplate stripping. Scores are assigned to each branch of the tree, starting from the leaf nodes, with a score of -2 for a tag and a score equal to the token count for a chunk of text [7]. Then, by propagating scores up the tree, we can find the section of the page where the score is highest, which should contain the main content. We then extract the text and all formatting information from these branches of the tree, allowing a simple heuristic to detect otherwise unmarked sentence boundaries. Figure 6 shows the improvement in text quality, with paragraph boundaries maintained and extra full stops included after header lines.
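A minimal sketch of this tree-scoring idea, using Python's standard `html.parser` to build the tree. The node representation, the void-tag list, the skipping of script/style text and the choice of the single highest-scoring element are assumptions made for illustration; the text does not specify how the WebCorp module handles ties, scripts or sibling branches.

```python
from html.parser import HTMLParser

# Elements that never take a closing tag, so they are not treated as open containers.
VOID_TAGS = {"area", "base", "br", "col", "hr", "img", "input", "link", "meta", "wbr"}


class Node:
    def __init__(self, tag, attrs=None, parent=None):
        self.tag = tag
        self.attrs = dict(attrs or [])
        self.parent = parent
        self.children = []  # child Nodes and text chunks (str), in document order


class TreeBuilder(HTMLParser):
    """Builds a simple element tree, tolerating unclosed and stray tags."""

    def __init__(self):
        super().__init__()
        self.root = Node("#document")
        self.current = self.root

    def handle_starttag(self, tag, attrs):
        node = Node(tag, attrs, self.current)
        self.current.children.append(node)
        if tag not in VOID_TAGS:
            self.current = node

    def handle_endtag(self, tag):
        # Close the nearest open element with this name; ignore stray end tags.
        node = self.current
        while node is not None and node.tag != tag:
            node = node.parent
        if node is not None and node.parent is not None:
            self.current = node.parent

    def handle_data(self, data):
        # Skip whitespace-only chunks and script/style content.
        if data.strip() and self.current.tag not in ("script", "style"):
            self.current.children.append(data)


def main_content(html):
    """Return (node, score) for the highest-scoring element.

    Each tag scores -2 and each text chunk scores its whitespace token count;
    an element's score is -2 plus the sum of its children's scores.
    """
    builder = TreeBuilder()
    builder.feed(html)
    builder.close()

    best_score, best_node = float("-inf"), builder.root

    def score(node):
        nonlocal best_score, best_node
        total = -2
        for child in node.children:
            total += len(child.split()) if isinstance(child, str) else score(child)
        if total > best_score:
            best_score, best_node = total, node
        return total

    score(builder.root)
    return best_node, best_score
```

Because the winning node is an element rather than a flat token range, its children (headings, paragraphs, line breaks) remain available, which is what makes paragraph-preserving extraction possible.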

Figure 6. Improvement in text quality through boilerplate removal and sentence boundary detection.

Figure 7 illustrates the improvement in search output (for the term phishing) which our tree-based boilerplate removal module provides. The unfiltered version contains the link text from the top of the original page and conflates the heading with the first sentence of the text. The boilerplate module removes this spurious text and inserts a full stop between the heading and the first sentence.
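The full-stop insertion can be illustrated with a hypothetical helper that treats each extracted block (heading or paragraph) as ending a sentence. It assumes the blocks have already been extracted; the rule used here (append a full stop when a block does not end in '.', '!' or '?') is a simplification and not necessarily the heuristic used in the module described above.

```python
SENTENCE_FINAL = (".", "!", "?")


def punctuate_blocks(blocks):
    """Normalise whitespace in each non-empty block and append a full stop
    where the block lacks sentence-final punctuation (typically headings)."""
    result = []
    for block in blocks:
        text = " ".join(block.split())
        if not text:
            continue
        if not text.endswith(SENTENCE_FINAL):
            text += "."
        result.append(text)
    return result
```

Joining `punctuate_blocks(["Anti-Phishing - Protecting Employees from Email Fraud", "Phishing is a form of fraud used to gain personal information for purposes of identity theft."])` with a space reproduces the filtered output shown in Figure 7.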

Unfiltered output:

Partner Login | Support Login HOW TO BUY PRODUCTS SOLUTIONS RESOURCES PARTNERS SUPPORT COMPANY GLOBAL SITES CipherTrust Product Family Anti-Phishing - Protecting Employees from Email Fraud Phishing is a form of fraud used to gain personal information for purposes of identity theft.

Filtered output:

Anti-Phishing - Protecting Employees from Email Fraud. Phishing is a form of fraud used to gain personal information for purposes of identity theft.

Figure 7. Search output before and after boilerplate removal and sentence detection.

Most research using the web as a corpus considers only files in HTML format (including the work of Ide et al., Fletcher, and Baroni & Bernardini discussed previously). This is the most accessible format on the web, in the sense that text can be extracted from HTML files using relatively simple software tools [8].

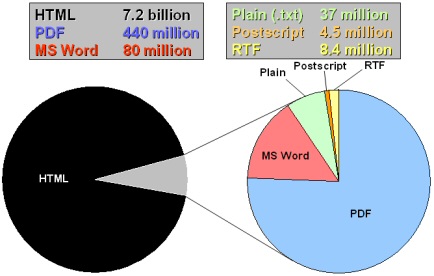

However, for several years, Google has indexed other file formats, including Portable Document Format (PDF), Microsoft Word (DOC) and PostScript (PS). We conducted an experiment to discover how widespread each of these formats is on the web, using the 'filetype' operator in Google [9]; the results are shown in Figure 8.

Figure 8. Google index: number of files in English in each format.

Whilst the vast majority of web documents are in HTML, our results show that there are significant numbers of documents in other formats, especially PDF. We felt it necessary to examine the kinds of text found in these other formats to see whether they differ significantly from those found in HTML. Our intuition was that documents such as academic papers are more likely to be found in PS or PDF format than in HTML, but we wished to examine to what extent this is the case.

Before we could carry out the analysis outlined above, it was necessary to run converter software to extract the textual content from non-HTML files. We used existing programs wherever possible, such as the DOC format converter Antiword, but developed a new filter for RTF documents. On top of each format converter, we built our own procedures for extracting extra information stored with the document, such as author and publication date. This experiment was designed partly to facilitate the iterative refinement of our file format converters [10].

In order to test our hypothesis, we carried out a large test crawl of the web, beginning at the portal page http://www.thebigproject.co.uk/. Whilst the WebCorp Linguist's Search Engine uses careful seeding techniques for the downloading of its specific sub-corpora, for this experiment we wished to use a large random chunk of the web, and the 'Big Project' site was ideal.

We downloaded almost 1 million files in our supported formats but focussed on a random subset of these (300,000) to allow fuller manual analysis of results. After filtering out errors ('404' messages, i.e. pages not found) and running our boilerplate stripper on the HTML files, we were left with 106,000 files (67 million tokens of text). The relative frequencies of document formats in this test crawl mirrored those in the Google index (Figure 8).

In this experiment we wished to test whether:

- some documents or document formats on the web are more or less 'text-like' than others

- there are some documents or document formats on the web which pass through our initial filters but would not be considered 'genuine' texts by a human reader and, if so, whether additional filters can be developed and applied.

We chose five parameters, which we consider in combination in the following section:

- document length

- sentence length

- paragraph length

- number of paragraphs

- lexis

The graphs in Figure 9 show document length (in tokens) and average number of tokens per sentence for each document in the test crawl [11], differentiated by file format. Each graph shows the results for an individual file format. The HTML and PDF formats present the most interesting results.

Figure 9. Document and sentence lengths in test crawl by file format.

Outlier group 1 in Figure 9 consists of four very long PDF files (between 100,000 and 200,000 tokens) which do not contain especially long sentences on average. The longest of these files, with 192,888 tokens over 169 pages, is a Michigan Technological University 'catalog', containing course descriptions, staff profiles, etc. Whilst this file does contain relatively long sections of connected prose, it also contains several lists, a contents page and a full index, with the result that it has the second-lowest average number of tokens per sentence (1.49) in the test crawl.

Another of the long PDF examples is a UK government 'Emergency Preparedness' document, which has 104,502 tokens over 232 pages and, like the previous example, contains lists as well as connected prose. In this case, the latter is laid out in numbered paragraphs, in the style of a legal document.

One explanation for the appearance of several very long PDF files in our test crawl is that PDFs usually contain a whole document, often an electronic version of a multi-page physical document. HTML, on the other hand, does not have any concept of 'pages' within a single document, and many HTML documents are split by their authors over several files, each representing one 'page'. Usually there will be a 'Next' or 'More' link at the bottom of each page, leading to the next. This is good HTML practice, and its prevalence is borne out in Table 1, which shows the percentage of files in each format containing more than 2000 tokens, Ide et al.'s minimum token count for connected prose. Furthermore, Figure 9 shows that none of the HTML files in our test crawl contains more than 25,000 tokens.

Table 1. Files with more than 2000 tokens.

| File format | % of files |
|---|---|
| HTML | 3.73 |
| PDF | 39.28 |
| MS Word | 15.83 |
| Plain Text | 46.67 |
| PS | 100 |
| RTF | 75 |
| Overall | 4.68 |

This fact is important to bear in mind when using the web to build corpora. A single HTML file may not be fully 'text-like' in that it may contain only a single sub-section of a larger document but, unlike Ide et al., we do not reject such files. The WebCorp Linguist's Search Engine is capable of creating a fully cohesive text by following the 'Next' or 'More' links at the bottom of HTML files and piecing together the individual sections [12].
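Following such links can be sketched as below. The set of link labels, the `fetch` callable (anything that maps a URL to its HTML, e.g. a wrapper around an HTTP client) and the page limit are hypothetical; the sketch only collects the pages in order, leaving extraction and concatenation of their main content to the boilerplate stage.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical set of anchor labels treated as continuation links.
NEXT_LABELS = {"next", "more", "next page"}


class NextLinkFinder(HTMLParser):
    """Records the href of the first <a> whose text is a continuation label."""

    def __init__(self):
        super().__init__()
        self.href = None       # href of the <a> currently open, if any
        self.text = []
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.href = dict(attrs).get("href")
            self.text = []

    def handle_data(self, data):
        if self.href is not None:
            self.text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self.href is not None:
            # Lower-case and drop arrows/punctuation such as '>>' or a raquo character.
            label = re.sub(r"[^a-z ]", "", "".join(self.text).lower()).strip()
            if label in NEXT_LABELS and self.next_href is None:
                self.next_href = self.href
            self.href = None


def collect_pages(start_url, fetch, max_pages=20):
    """Follow continuation links from start_url; return [(url, html), ...] in order."""
    pages, seen, url = [], set(), start_url
    while url and url not in seen and len(pages) < max_pages:
        seen.add(url)
        html = fetch(url)
        pages.append((url, html))
        finder = NextLinkFinder()
        finder.feed(html)
        finder.close()
        url = urljoin(url, finder.next_href) if finder.next_href else None
    return pages
```

The `seen` set guards against circular 'Next' links, and relative links are resolved against the current page with `urljoin`.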

Outlier group 2 in Figure 9 contains HTML files with an average sentence length

of more than 100 tokens [13]. Manual analysis reveals

that 181 of these are spam for pornographic websites, containing long

sequences of words with no punctuation [14]. Other HTML

files in this outlier group include those containing poorly written

text with no punctuation (on message boards, etc) and other 'non-text'

pages - e.g. lists, crossword puzzles. There are, however, some

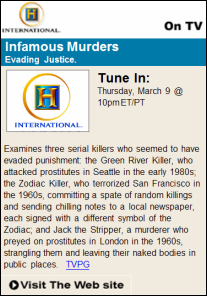

genuine texts with a high average sentence length, such as that shown

in Figure 10.

Figure 10. Genuine long sentence.

Although this text contains 81 tokens in a single sentence, we feel that it would be considered a 'genuine' text by a human reader. This example is also of note in that the beginning of the sentence contains a verb (Examines) with an ellipted subject, which refers back to the header 'Infamous Murders Evading Justice' (the title of the television programme being described). Since the header is separated from the rest of the text and rendered in a different font, a basic boilerplate removal tool may well remove it, thus leaving the extracted text incomplete and not fully cohesive. This example alerted us to the need for further refinements to our boilerplate stripper to account for such cases.
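A flag for files like those in outlier group 2 might look like the following sketch. Splitting sentences at '.', '!' or '?' followed by whitespace, and tokenising on whitespace, are simplifying assumptions, and the function names are hypothetical; as the example in Figure 10 shows, flagged files are better treated as candidates for manual review than rejected outright.

```python
import re

# Naive sentence boundary: '.', '!' or '?' followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def avg_sentence_length(text):
    """Mean number of whitespace tokens per sentence (0.0 for empty text)."""
    sentences = [s for s in SENTENCE_END.split(text.strip()) if s]
    if not sentences:
        return 0.0
    return sum(len(s.split()) for s in sentences) / len(sentences)


def flag_long_sentences(docs, threshold=100):
    """Return the names of documents whose mean sentence length exceeds `threshold` tokens."""
    return [name for name, text in docs.items() if avg_sentence_length(text) > threshold]
```

A page of unpunctuated spam counts as one very long 'sentence' and is flagged, whereas ordinary prose averages far below the threshold.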

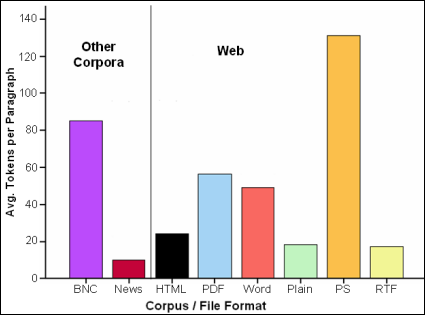

For the first part of this test, we compared the average paragraph lengths of texts in our test crawl with those in the British National Corpus (BNC) and in our 700-million-word Independent / Guardian newspaper corpus (see Figure 11).

Figure 11. Average paragraph length in each web format and other corpora.

The most noticeable finding here is that the PS files in our crawl have much longer paragraphs on average than all other formats. Manual analysis reveals that this is a reflection of the kinds of texts stored in PS format: academic papers, technical manuals, etc. The example shown in Figure 12 (from http://peipa.essex.ac.uk/benchmark/tutorials/essex/tutorial.ps) has 139 tokens in its first paragraph and 146 in its second.

Figure 12. Long paragraphs in a PostScript file.

These paragraphs may not appear to be particularly long to the human eye, but they are long by the standards of the web, where HTML pages such as that in Figure 13 (from http://news.bbc.co.uk/2/hi/uk_news/2080735.stm) dominate.

Figure 13. Short paragraphs in an HTML file.

In this BBC news article, each sentence is laid out on screen as a new paragraph. This is the norm for news articles in the offline world too (as reflected in the bar for the newspaper corpus in Figure 11), but this style of paragraphing appears to be more widespread in HTML files of all kinds on the web. Table 2 shows the percentage of files in each format with more than 30 tokens per paragraph on average (another of Ide et al.'s measures).

Table 2. Files with more than 30 tokens per paragraph on average. [15]

| File format | % of files |
|---|---|
| HTML | 23.02 |
| PDF | 67.71 |
| MS Word | 82.24 |
| Plain Text | 74.39 |
| PS | 85.71 |
| Overall | 24.26 |

As HTML files tend to have shorter paragraphs, one might expect them to contain more paragraphs overall than files in other formats. However, Table 3 shows that this is not the case, which reflects the phenomenon noted in the previous section, whereby HTML documents are often split between multiple files, each containing a sub-section of the text (and, thus, a subset of the paragraphs).

Table 3. Average number of paragraphs for each file format.

| File format | Average number of paragraphs |
|---|---|
| HTML | 31 |
| PDF | 110 |
| MS Word | 33 |
| Plain Text | 29 |
| PS | 80 |
| Overall | 33 |

Any analysis of paragraphs in text requires accurate tokenisation and paragraph boundary detection. The fact that our boilerplate remover maintains paragraph breaks in HTML files allowed us to analyse paragraphs in our test crawl. We are aware, however, that paragraph boundaries are less reliable in HTML files than in files in other formats. As we have shown, whilst the HTML language does have a paragraph tag (<P>), this is not always used solely for that purpose, and there are, in fact, several other tags which can be used to give the visual appearance of a paragraph break [16]. Ide et al. (2002) did not remove boilerplate text, they excluded <P> tags when parsing HTML pages, and they did not split text within <PRE> tags at all [17]; their paragraph divisions, and the conclusions they draw from them, are therefore somewhat questionable.
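To illustrate why paragraph boundaries in HTML depend on more than <P>, the sketch below treats a hypothetical set of block-level tags (including <DIV>, <BR>, headings and list items) as paragraph breaks and ignores script and style content. The tag set is an assumption, not the list referred to in note [16], and treating a single <BR> as a break is a deliberate simplification.

```python
from html.parser import HTMLParser

# Hypothetical set of tags treated as paragraph breaks.
BREAK_TAGS = {"p", "div", "br", "li", "tr", "blockquote",
              "h1", "h2", "h3", "h4", "h5", "h6"}


class ParagraphSplitter(HTMLParser):
    """Collects text into paragraphs, starting a new one at any break tag."""

    def __init__(self):
        super().__init__()
        self.paragraphs, self._buf = [], []
        self._skip = 0  # nesting depth inside <script>/<style>

    def flush(self):
        # Inline tags such as <b> do not break; join raw text, then normalise spaces.
        text = " ".join("".join(self._buf).split())
        if text:
            self.paragraphs.append(text)
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        elif tag in BREAK_TAGS:
            self.flush()

    def handle_endtag(self, tag):
        if tag in ("script", "style"):
            self._skip = max(0, self._skip - 1)
        elif tag in BREAK_TAGS:
            self.flush()

    def handle_data(self, data):
        if not self._skip:
            self._buf.append(data)


def split_paragraphs(html):
    parser = ParagraphSplitter()
    parser.feed(html)
    parser.close()
    parser.flush()  # emit any trailing text
    return parser.paragraphs
```

A parser that recognised only <P> would return the <DIV>-based page in the test as a single undivided block, which is the kind of distortion discussed above.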

Previous studies have compared word frequencies in web documents with those in corpora such as the BNC. The results have been used either as a filter to determine whether web documents are sufficiently 'text-like' to be included in a corpus (Ide et al. 2002; Cavaglia & Kilgarriff 2001) or as a way of comparing corpora built from the web with standard corpora (Fletcher 2004; Sharoff 2006).

Fletcher's study used the ten highest-frequency (grammatical) words from the BNC to query the AltaVista search engine and find HTML files to download. After filtering, he was left with 4,949 files, or 5.4 million tokens of web text. Upon comparing word frequency ranks from this web corpus with those from the BNC, Fletcher found higher ranks in the former for words such as you, will, we, information, our, site, page, university, data, search, please and file.

Following Rayson & Garside (2000), we used the log-likelihood statistic rather than frequency ranks to compare word lists from our web corpus with those from the BNC [18]. Sharoff (2006) used the same statistic to compare his web corpus (HTML files only) with the BNC, but we take the experiment further by examining separate word lists for each of the file formats in our web corpus. The full set of results can be found at http://rdues.bcu.ac.uk/llstats/ and we discuss the most significant findings here.
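For reference, the log-likelihood calculation commonly used for such comparisons works as follows: for a word occurring a times in corpus 1 (c tokens) and b times in corpus 2 (d tokens), the expected frequencies are E1 = c(a+b)/(c+d) and E2 = d(a+b)/(c+d), and LL = 2(a ln(a/E1) + b ln(b/E2)), where a term with an observed frequency of zero contributes nothing. The sketch below implements this; the function names and the 'corpus1'/'corpus2' labels for the direction of overuse are hypothetical, and tokenisation and word-list construction are assumed to have been done already.

```python
import math


def log_likelihood(a, b, c, d):
    """LL for a word seen a times in corpus 1 (c tokens) and b times in corpus 2 (d tokens)."""
    e1 = c * (a + b) / (c + d)  # expected frequency in corpus 1
    e2 = d * (a + b) / (c + d)  # expected frequency in corpus 2
    ll = 0.0
    if a > 0:
        ll += a * math.log(a / e1)
    if b > 0:
        ll += b * math.log(b / e2)
    return 2 * ll


def compare_wordlists(freq1, freq2, top=20):
    """Rank words by LL and label the corpus in which each is relatively more frequent."""
    c, d = sum(freq1.values()), sum(freq2.values())
    rows = []
    for word in set(freq1) | set(freq2):
        a, b = freq1.get(word, 0), freq2.get(word, 0)
        if a * d > b * c:      # equivalent to a/c > b/d, without division
            where = "corpus1"
        elif a * d < b * c:
            where = "corpus2"
        else:
            where = "neither"
        rows.append((word, log_likelihood(a, b, c, d), where))
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows[:top]
```

LL itself is non-directional, which is why the relative-frequency test is needed to decide whether a word is 'overused' in one corpus or the other.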

Table 4. Web V BNC - words overused on the web.

| Word | L-L | Word | L-L |
|---|---|---|---|
| 2005 | 131078.56 | Windows | 33863.82 |
| 2006 | 125090.10 | Service | 33015.02 |
| your | 103000.86 | website | 31567.14 |
| information | 81698.92 | link | 31413.66 |
| page | 74404.35 | Continue | 30379.89 |
| site | 67103.63 | software | 29760.66 |
| Web | 63768.45 | security | 29751.72 |
| Internet | 61882.93 | web | 29581.89 |
| you | 56994.24 | More | 29388.02 |
| online | 55289.54 | URL | 28781.96 |
| email | 53343.66 | Click | 28447.00 |
| 2004 | 51341.10 | Mar | 27958.54 |
| or | 47322.75 | Yahoo | 27449.70 |
| Security | 47230.26 | users | 27170.18 |
| Posted | 44084.59 | Search | 26375.35 |
| click | 38965.34 | Google | 25897.49 |
| 2003 | 37180.42 | data | 25517.43 |
| Reading | 36450.68 | Linux | 24632.92 |
| Microsoft | 35173.24 | user | 24401.86 |
| U.S | 33874.40 | vulnerability | 24244.80 |

In log-likelihood statistics, 'overused' refers to words with a higher relative frequency in one corpus compared with the other, without the negative connotations the term usually carries. Table 4 shows the words overused on the web (all our supported file formats combined) when compared with the BNC and, thus, also shows the words underused in the BNC when compared with the web. Here we see the time dimension coming into play, explicitly and unsurprisingly in the high log-likelihood scores for the years 2003, 2004, 2005 and 2006, but also in the occurrence of words referring to modern technology and the infrastructure of the web itself. The fact that all years prior to 2003 appear much lower down the list reflects the bias toward newness which exists on the web and which we have noted previously (Kehoe 2006).

Fletcher (2004) concludes that 'the BNC data show a distinct tendency toward third person, past tense, and narrative style, while the Web corpus prefers first (especially we) and second person, present and future tense, and interactive style', but our results do not correlate fully with this. We do see overuse of the second person (you, your) on the web, but Table 5 shows that the first person I is, in fact, overused in the BNC (underused on the web). This can be explained by the fact that Fletcher considered only HTML files, whereas we included other formats (see following section).

Table 5. Web V BNC - words overused in the BNC.

| Word | L-L | Word | L-L |
|---|---|---|---|
| was | 252336.80 | been | 23813.34 |
| had | 159984.53 | Mr | 22033.56 |
| the | 154330.97 | It | 21565.92 |
| he | 140934.16 | a | 21428.39 |
| her | 127530.25 | as | 21406.91 |
| his | 122142.08 | said | 20877.00 |
| she | 97106.03 | they | 19698.53 |
| were | 87946.84 | me | 18901.10 |
| of | 72774.69 | But | 17076.37 |
| He | 63437.00 | did | 16478.95 |
| him | 59625.23 | cent | 16062.55 |
| She | 44947.76 | thought | 14431.05 |
| which | 44663.73 | looked | 13634.15 |
| I | 41916.52 | two | 13221.31 |
| it | 40767.25 | who | 13167.25 |
| in | 38769.57 | man | 12667.63 |
| would | 32761.59 | be | 12436.13 |
| but | 30400.01 | went | 12395.98 |
| that | 28013.86 | himself | 12214.06 |
| there | 24106.62 | could | 12142.48 |

The appearance of the word cent in Table 5 is interesting as it reflects the difference in language variety bias between the two corpora (the US currency term is largely absent from the British National Corpus) but also the shift from the orthographic form per cent to percent (percent ranks high in the 'overused on the web' list, with a log-likelihood of 23021.23).

Other file formats

Tables 6 and 7 explore the differences between file formats within the web crawl, HTML-PDF in the former and HTML-PS in the latter. These tables raise several noteworthy points, which we outline below together with our interpretations based upon manual analysis of files from the crawl:

- Table 6 shows that the pronouns you and I are overused in HTML (or underused in PDF), whilst Table 7 shows an overuse of you (upper and lower case) and your in HTML (underuse in PS). This indicates that the first- and second-person interactive style found in HTML is less prominent in PDF and PS files.

- The 'overused in PDF' list (Table 6) reflects the fact that advisory notices (concerning security vulnerabilities in computer software) are more common in PDF format than in HTML, and that software licences, with terms & conditions and frequent use of shall, also tend to appear in PDF format [19]. Somewhat counter-intuitively, shall is overused on the web as a whole when compared with the BNC (log-likelihood of 1058.43) as a result of the widespread nature of such documents.

- The 'overused in PS' list in Table 7 reflects the large proportion of academic papers (containing statistics, formulæ and technical discussions) in PS format.

- The underuse of 2006 in PDF (Table 6) and of 2005 and 2006 in PS (Table 7) could be taken as an indication that the files in these formats on the web are less up-to-date than those in HTML but, more likely, it reflects the fact that these formats contain a large proportion of academic papers and, at the time of our crawl in April 2006, authors were not yet citing 2006 or (to a lesser extent) 2005 papers in their work.

Table 6. HTML V PDF.

| Overused in HTML | L-L | Overused in PDF | L-L |
|---|---|---|---|
| you | 36831.23 | GeoTrust | 26458.65 |
| I | 21361.75 | Certificate | 21079.45 |
| your | 19506.68 | 0 | 19641.68 |
| 2006 | 11968.13 | 1 | 17864.05 |
| page | 10167.13 | emergency | 16295.69 |
| his | 9305.35 | 2 | 15438.40 |
| Posted | 9234.08 | Advisory | 15265.31 |
| my | 8379.78 | 3 | 14748.91 |
| Reading | 8375.98 | Issued | 13582.49 |
| said | 8103.42 | responders | 13505.73 |
| he | 7991.45 | vulnerability | 13352.54 |
| You | 7887.54 | 4 | 13307.65 |
| like | 7197.41 | Category | 13027.63 |
| our | 6753.74 | Security | 12935.81 |
| it | 6287.24 | Consumer | 12803.71 |
| Continue | 6264.29 | be | 12590.05 |
| More | 6245.99 | shall | 12131.67 |
| get | 6241.96 | Direct | 12004.83 |
| site | 5910.73 | Subscriber | 11635.91 |
| just | 5870.55 | malicious | 10690.44 |

Table 7. HTML V PS.

| Overused in HTML | L-L | Overused in PS | L-L |
|---|---|---|---|
| you | 1402.59 | 2 | 3881.36 |
| your | 854.53 | i | 3086.35 |
| 2006 | 277.35 | algorithm | 2800.59 |
| You | 260.04 | data | 2482.99 |
| 2005 | 254.84 | X | 2439.30 |
| or | 250.55 | algorithms | 2125.63 |
| he | 236.15 | probability | 2107.77 |
| page | 235.14 | P | 2029.43 |
| his | 227.30 | Eunomia | 1782.19 |
| site | 217.60 | x | 1698.64 |
| my | 201.38 | 0 | 1607.84 |
| who | 188.16 | stereo | 1400.42 |
| at | 183.74 | Berna | 1366.84 |
| and | 172.87 | Gaussian | 1329.03 |
| about | 172.35 | 1 | 1171.39 |
| on | 166.07 | f | 1057.23 |
| said | 164.98 | image | 1042.24 |
| people | 161.14 | statistical | 976.69 |
| business | 156.76 | distributions | 937.70 |
| security | 149.69 | model | 898.08 |

Analysis of these log-likelihood statistics and a sample of the corresponding texts leads us to suggest the existence of a continuum on the web, whereby HTML is the most heterogeneous text format and PS the most homogeneous (used mainly for academic and technical discussions). PDF sits somewhere in the middle, containing a wide range of texts, from academic papers to marketing material, but without covering the full range that HTML does.
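For readers wishing to reproduce comparisons of the kind shown in Tables 6 and 7, the following Python sketch computes the standard two-corpus log-likelihood statistic described by Rayson & Garside (2000), which Rayson's online calculator also implements. It is an illustration only, not the code used to produce the tables: the function names are hypothetical, and it assumes that each corpus size is simply the total token count of the frequency list supplied.

```python
import math
from collections import Counter


def log_likelihood(freq1, size1, freq2, size2):
    """Log-likelihood (G2) for one word observed in two corpora."""
    total_freq = freq1 + freq2
    total_size = size1 + size2
    # Expected frequencies if the word were equally frequent in both corpora
    expected1 = size1 * total_freq / total_size
    expected2 = size2 * total_freq / total_size
    ll = 0.0
    for observed, expected in ((freq1, expected1), (freq2, expected2)):
        if observed > 0:  # the term 0 * ln(0) is taken to be 0
            ll += observed * math.log(observed / expected)
    return 2 * ll


def overused(counts1: Counter, counts2: Counter, top=20):
    """Words relatively more frequent in corpus 1 than corpus 2, ranked by LL."""
    size1 = sum(counts1.values())
    size2 = sum(counts2.values())
    scored = []
    for word in set(counts1) | set(counts2):
        f1, f2 = counts1[word], counts2[word]
        if f1 / size1 > f2 / size2:  # keep only words overused in corpus 1
            scored.append((word, log_likelihood(f1, size1, f2, size2)))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top]
```

Given two hypothetical Counter objects of token frequencies, html_counts and pdf_counts, overused(html_counts, pdf_counts) would correspond to the left-hand half of a table such as Table 6 and overused(pdf_counts, html_counts) to the right-hand half.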

The preliminary experiments discussed in this paper have confirmed that the web is a useful source of linguistic data on a vast scale, given the right tools and an awareness of its structure and composition. This awareness is particularly important when dealing with HTML files, perhaps the least 'text-like' format in a conventional sense. When using HTML files to build a corpus, one needs to be aware that:

- many HTML files contain boilerplate sections, but the main content section can be extracted by adopting a tree parser approach to boilerplate removal. This approach has the added benefit that it maintains paragraph boundaries and allows full stops to be inserted to improve text quality if required.

- HTML documents are designed for ease of reading on screen and are often written in short paragraphs, with a separate file for each sub-section.
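The tree parser approach to boilerplate removal mentioned in the first point can be sketched roughly as follows. This is a minimal Python illustration under stated assumptions, not our actual implementation: following footnote 7, it assumes each word scores +1 and each element node -2 (standing in for its opening and closing tags), and it keeps the subtree with the highest total, in the spirit of Finn's Body Text Extraction but applied to tree nodes. Paragraph breaks are inserted at block tags and at two consecutive <BR> tags (cf. footnote 16), with an optional full stop added to unpunctuated paragraphs. The tag sets and function names are hypothetical, and <PRE> content is treated as an ordinary block rather than split at newlines as footnote 17 describes.

```python
from html.parser import HTMLParser

# Tag sets chosen for this sketch only (hypothetical, not the paper's lists)
VOID = {"br", "img", "hr", "meta", "link", "input", "wbr"}
SKIP = {"script", "style"}
BLOCK = {"p", "div", "table", "tr", "td", "li", "h1", "h2", "h3",
         "h4", "h5", "h6", "blockquote", "pre"}


class Node:
    def __init__(self, tag):
        self.tag = tag
        self.children = []  # Node objects and text strings, in document order


class TreeBuilder(HTMLParser):
    """Builds an element tree; closing tags are not stored as separate nodes."""

    def __init__(self):
        super().__init__()
        self.root = Node("#root")
        self.stack = [self.root]

    def handle_starttag(self, tag, attrs):
        node = Node(tag)
        self.stack[-1].children.append(node)
        if tag not in VOID:
            self.stack.append(node)

    def handle_endtag(self, tag):
        # Pop back to the matching open element; unmatched closing tags are
        # ignored, so missing or stray closing tags do not break the tree.
        for i in range(len(self.stack) - 1, 0, -1):
            if self.stack[i].tag == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        if self.stack[-1].tag not in SKIP:
            self.stack[-1].children.append(data)


def score(node, best):
    """Subtree score: +1 per word, -2 per element; tracks the best subtree."""
    total = -2
    for child in node.children:
        total += len(child.split()) if isinstance(child, str) else score(child, best)
    if total > best[0]:
        best[0], best[1] = total, node
    return total


def paragraphs(node, add_full_stops=True):
    """Flatten a subtree into paragraphs at block tags and at <br><br>."""
    paras, current = [], []
    state = {"after_br": False}

    def flush():
        text = " ".join("".join(current).split())
        if text:
            if add_full_stops and text[-1] not in ".!?":
                text += "."  # e.g. close off a heading or list item
            paras.append(text)
        current.clear()
        state["after_br"] = False

    def walk(n):
        for child in n.children:
            if isinstance(child, str):
                current.append(child)
                if child.strip():
                    state["after_br"] = False
            elif child.tag == "br":
                if state["after_br"]:
                    flush()  # two <br> tags in a row mark a paragraph break
                else:
                    current.append(" ")
                    state["after_br"] = True
            elif child.tag in BLOCK:
                flush()
                walk(child)
                flush()
            else:
                walk(child)

    walk(node)
    flush()
    return paras


def extract_main_text(html, add_full_stops=True):
    builder = TreeBuilder()
    builder.feed(html)
    builder.close()
    best = [float("-inf"), builder.root]
    score(builder.root, best)
    return paragraphs(best[1], add_full_stops)
```

Calling extract_main_text on a page returns the paragraphs of the highest-scoring subtree as a list of strings; link-heavy navigation bars and footers score negatively and so tend to fall outside it. Passing add_full_stops=False leaves the extracted text unaltered.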

Given that Ide et al. (2002) considered only HTML files and did not take either of the factors listed above into account, it is not surprising that they conclude that 'the web is not a source of the range of written texts that readers frequently encounter. As such, web texts lack the variety of linguistic features that can be found in many texts. In addition, our data suggest that web texts differ from much standard prose in their rhetorical structure: the average length of a web "paragraph" is about 50 words, whereas [...] the average paragraph length in this paper is well over 100 words.'

In fact, Ide et al.'s paper is now a 'web text' itself, available in PDF format, so their conclusion does not hold if the notion of 'web text' is extended beyond a simple study of HTML text. We have illustrated that, by considering files in a variety of formats, it is possible to widen the definition of 'text' on the web. File format can offer clues about textual domain, and the fact that different file formats on the web contain different kinds of text will help with the building of our domain-specific sub-corpora, allowing us to turn to PDF or PS files for academic papers, for instance. Further work is required in this area and will form a later stage of our Search Engine project. At the simplest level, however, it is already possible to use factors such as sentence length, paragraph length, number of paragraphs and frequent lexis to filter out spam and other 'non-text' from the web corpus.
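As a rough illustration of such simple filtering, the Python sketch below rejects a document unless its number of paragraphs, mean sentence length and mean paragraph length fall within set bounds and a minimum share of its tokens are very frequent function words; an optional banned-word list in the spirit of Baroni (2005) (see footnote 14) can also be supplied. Interpreting 'frequent lexis' as function-word coverage is our assumption here, and all threshold values, the function-word list and the function name are hypothetical, not values from our experiments.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Optional

# Hypothetical list of very frequent English function words
FUNCTION_WORDS = frozenset({"the", "of", "and", "to", "a", "in", "is", "that", "it", "for"})


@dataclass
class Thresholds:
    """Hypothetical cut-offs for illustration; not values from the paper."""
    min_paragraphs: int = 2
    min_sentence_len: float = 5.0     # mean tokens per sentence
    max_sentence_len: float = 60.0
    min_paragraph_len: float = 15.0   # mean tokens per paragraph
    min_function_ratio: float = 0.1   # share of tokens that are function words
    max_banned_ratio: float = 0.0     # share of tokens on the banned-word list


SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")
TOKEN = re.compile(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?")


def looks_like_text(paragraphs: List[str],
                    banned: Iterable[str] = (),
                    t: Optional[Thresholds] = None) -> bool:
    """Return True if a document's paragraphs pass simple 'text-likeness' checks."""
    t = t or Thresholds()
    banned_set = {w.lower() for w in banned}
    paragraphs = [p for p in paragraphs if p.strip()]
    if len(paragraphs) < t.min_paragraphs:
        return False
    sentences = [s for p in paragraphs for s in SENTENCE_SPLIT.split(p) if s.strip()]
    tokens = [tok.lower() for p in paragraphs for tok in TOKEN.findall(p)]
    if not tokens:
        return False
    mean_sentence = len(tokens) / len(sentences)
    mean_paragraph = len(tokens) / len(paragraphs)
    if not t.min_sentence_len <= mean_sentence <= t.max_sentence_len:
        return False
    if mean_paragraph < t.min_paragraph_len:
        return False
    function_ratio = sum(tok in FUNCTION_WORDS for tok in tokens) / len(tokens)
    banned_ratio = sum(tok in banned_set for tok in tokens) / len(tokens)
    return function_ratio >= t.min_function_ratio and banned_ratio <= t.max_banned_ratio
```

Keeping the cut-offs in a separate Thresholds object makes it easy to tune them per file format, since, as Tables 6 and 7 suggest, HTML, PDF and PS texts are likely to differ in their typical sentence and paragraph lengths.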

With the storage capacity and processing power in place and work underway on the search interface, the new WebCorp Linguist's Search Engine will be available soon at http://www.webcorp.org.uk.

[1] A similar tool called KWiCFinder (Fletcher 2001) was developed subsequently. Unlike WebCorp, this is a client-side rather than server-side application (i.e. it must be installed on the user's PC) but it too suffers from reliance on commercial search engines.

[2] Some linguists have attempted to use occurrence figures from Google in quantitative studies (e.g. WebPhraseCount: Schmied 2006) but we have intentionally avoided this in WebCorp because search engine frequency counts are notoriously unreliable (see, for instance, Veronis 2005).

[3] Baroni, Sharoff and others are also part of the 'WaCky' project, which aims to assemble a suite of tools for building corpora from the web.

[4] This is not a limitation: even when users search 'the whole web' through Google they are, in fact, searching only Google's offline index of the web. Studies have long shown that search engines index only a small proportion of the data available on the web, much of which lies in the 'deep web' (Bergman 2001).

[5] Cf. the two text-centred principles of 'textuality' laid out by de Beaugrande & Dressler (1981): cohesion and coherence.

[6] When Fletcher (2004) refers to Ide et al.'s assertion that only 1-2% of web pages meet both criteria, he gives the second criterion incorrectly as '30 paragraphs per document' instead of '30 words per paragraph'. However, Ide et al.'s paper is itself unclear on this point, as they do indeed appear to restrict documents to those containing '2000 or more words and 30 or more paragraphs' in a later section.

[7] The value -2 is used for tags rather than -1 to account for the closing tags (e.g. </P>), which are not included in the tree representation. This is an advantage, as it means that we do not have to rely on HTML mark-up being accurate and including both opening and closing tags in all cases.

[8] Notwithstanding the issue of boilerplate removal discussed in the previous section.

[9] In this experiment, we used the Google index as an approximation of the 'whole indexable web'. The exact queries used were "the" filetype:pdf, etc. Each such query shows the number of documents in the specified format containing the word 'the' and, thus, offers an approximation of the total number of documents in English in that format. Google uses filename extensions to determine file type, so the HTML figures shown are a combination of the filetype:htm, filetype:html, filetype:shtm and filetype:shtml variations. The HTML figures are somewhat conservative estimates, as they include neither HTML documents with other extensions (such as .asp) nor documents with no file extension (e.g. http://rdues.bcu.ac.uk/ is a root URL which actually returns an HTML document). The other document formats are not affected by such variations. We did not include other Google-supported formats, such as Microsoft PowerPoint, in this experiment, as we felt they were less likely to contain connected prose. The experiment was carried out in April 2006.

[10] Some formats are more difficult than others to convert into plain text. PostScript can present problems, and even commercial tools are not perfect: the 'View as HTML' option in Google, for example, removes all occurrences of the characters 'if' and 'fi' from the text of PostScript files!

[11] We use 'document' here to refer to an individual file from our test crawl, but see the caveat in the next section for HTML documents.

[12] A possible shortcut would be to follow the 'Printable Version' link which appears within multi-page (and, thus, multi-file) documents on some sites. Such printable versions usually contain the whole document.

[13] For comparison, average sentence length in the BNC is around 22 tokens using our tokeniser.

[14] Baroni (2005) notes the same problem and proposes using a list of banned words to filter out pornographic spam.

[15] RTF format is excluded from this and subsequent tables as our crawl contained an insufficient number of files in this format from which to draw significant conclusions.

[16] A sequence of two <BR> tags, the <DIV> tag (as seen in Figure 5), the <TABLE> tag, etc.

[17] The <PRE> tag is used to demarcate pre-formatted text, within which paragraph boundaries will be marked by sequences of newline or carriage return characters.

[18] See also Rayson's online log-likelihood calculator.

[19] The appearance of the numbers 0-4 in this list is partly the result of problems dealing with tables of data in the extraction of text from PDF files, but it also reflects the fact that, unlike a PDF file, an HTML source file containing a numbered list does not actually contain the numbers. Instead it uses an <OL> tag to begin the list and an <LI> tag for each item, leaving the rendering of the numbers to the web browser.

AltaVista search engine, http://www.altavista.com [http://search.yahoo.com/?fr=altavista]

Antiword DOC format converter, http://www.winfield.demon.nl

Body Text Extraction (BTE) program by Aidan Finn, http://www.aidanf.net/posts/bte-body-text-extraction

British National Corpus (BNC), http://www.natcorp.ox.ac.uk

Google search engine, http://www.google.com

KWiCFinder Web Concordancer & Online Research Tool, http://www.kwicfinder.com/KWiCFinder.html

Log-likelihood calculator by Paul Rayson, http://ucrel.lancs.ac.uk/llwizard.html

Research and Development Unit for English Studies (RDUES), http://rdues.bcu.ac.uk

Test crawl:

Tools for post processing downloaded text by Marco Baroni, http://sslmitdev-online.sslmit.unibo.it/wac/post_processing.php

'WaCky' project, http://wacky.sslmit.unibo.it

WebCorp: The Web as Corpus, http://www.webcorp.org.uk

WebCorp Linguist's Search Engine, http://www.webcorp.org.uk/lse

(All URLs last checked 10 March 2016)

Baroni, M. 2005. "Large Crawls of the Web for Linguistic Purposes". Workshop paper presented at Corpus Linguistics 2005, Birmingham. http://sslmit.unibo.it/~baroni/wac/crawl.slides.pdf

Baroni, M. & S. Bernardini. 2004. "BootCaT: Bootstrapping corpora and terms from the Web". Proceedings of LREC 2004, ed. by M.T. Lino, M.F. Xavier, F. Ferreira, R. Costa & R. Silva, 1313-1316. Lisbon: ELDA. http://sslmit.unibo.it/~baroni/publications/lrec2004/bootcat_lrec_2004.pdf

de Beaugrande, R. & W. Dressler. 1981. Introduction to Text Linguistics. London: Longman. http://beaugrande.com/introduction_to_text_linguistics.htm

Bergh, G., A. Seppänen & J. Trotta. 1998. "Language Corpora and the Internet: A joint linguistic resource". Explorations in Corpus Linguistics, ed. by A. Renouf, 41-54. Amsterdam/Atlanta: Rodopi.

Bergman, M.K. 2001. "The Deep Web: Surfacing Hidden Value". The Journal of Electronic Publishing 7(1). http://quod.lib.umich.edu/j/jep/3336451.0007.104?view=text;rgn=main

Brin, S. & L. Page. 1998. "The Anatomy of a Large-Scale Hypertextual Web Search Engine". Computer Networks and ISDN Systems 30(1-7): 107-117. http://infolab.stanford.edu/pub/papers/google.pdf

Cavaglia, G. & A. Kilgarriff. 2001. "Corpora from the Web". Information Technology Research Institute Technical Report Series (ITRI-01-06). ITRI, University of Brighton. https://www.kilgarriff.co.uk/Publications/2001-CavagliaKilg-CLUK.pdf

Fletcher, W. 2001. "Concordancing the Web with KWiCFinder". Paper prepared for the American Association for Applied Corpus Linguistics Third North American Symposium on Corpus Linguistics and Language Teaching, Boston, MA, 23-25 March 2001. The author notes that "This rough draft was originally submitted for publication in a volume of selected papers from the conference, a project apparently has been abandoned." http://kwicfinder.com/FletcherCLLT2001.pdf

Fletcher, W. 2004. "Making the Web More Useful as a Source for Linguistic Corpora". Applied Corpus Linguistics: A Multidimensional Perspective, ed. by U. Connor & T. Upton, 191-205. Amsterdam: Rodopi. http://kwicfinder.com/AAACL2002whf.pdf

Ghani, R., R. Jones & D. Mladenic. 2003. "Building minority language corpora by learning to generate Web search queries". Knowledge and Information Systems 7(1): 56-83.

Ide, N., R. Reppen & K. Suderman. 2002. "The American National Corpus: More Than the Web Can Provide". Proceedings of the 3rd Language Resources and Evaluation Conference (LREC), Canary Islands. Paris: ELRA. http://www.cs.vassar.edu/~ide/papers/anc-lrec02.pdf

Kehoe, A. 2006. "Diachronic linguistic analysis on the web with WebCorp". The Changing Face of Corpus Linguistics, ed. by A. Renouf & A. Kehoe, 297-307. Amsterdam/New York: Rodopi. http://rdues.bcu.ac.uk/publ/AJK_Diachronic_WebCorp_DRAFT.pdf

Rayson, P. & R. Garside. 2000. "Comparing corpora using frequency profiling". Proceedings of the workshop on Comparing Corpora, held in conjunction with the 38th annual meeting of the Association for Computational Linguistics (ACL 2000), Hong Kong, ed. by A. Kilgarriff & T. Berber Sardinha. New Brunswick: Association for Computational Linguistics. http://ucrel.lancs.ac.uk/people/paul/publications/rg_acl2000.pdf

Schmied, J. 2006. "New ways of analysing ESL on the www with WebCorp and WebPhraseCount". The Changing Face of Corpus Linguistics, ed. by A. Renouf & A. Kehoe, 309-324. Amsterdam/New York: Rodopi.

Sharoff, S. 2006. "Creating General-Purpose Corpora Using Automated Search Engine Queries". Wacky! Working Papers on the Web as Corpus, ed. by M. Baroni & S. Bernardini. Bologna: GEDIT. http://wackybook.sslmit.unibo.it/pdfs/sharoff.pdf

Veronis, J. 2005. "Google's missing pages: mystery solved?". Blog entry. http://blog.veronis.fr/2005/02/web-googles-missing-pages-mystery.html
